Michael Rubloff

Aug 23, 2024

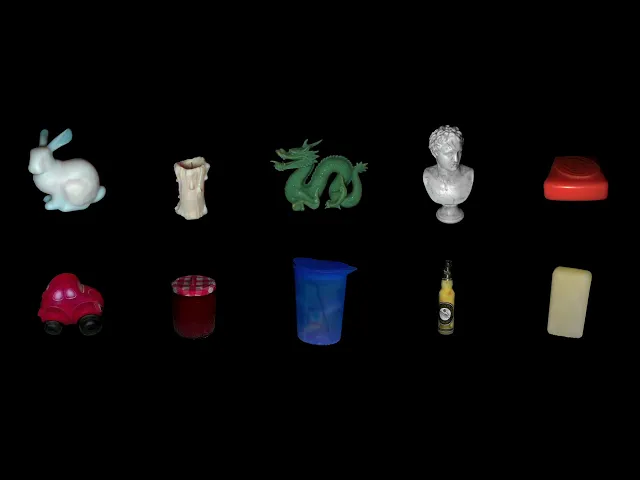

Have you ever spent time at a museum, carefully examining how light filters through a marble statue or observing the soft glow that radiates from a wax candle? These might seem like peculiar activities in isolation, but the faint, almost ethereal quality of light you notice is due to a phenomenon known as subsurface scattering (SSS). This occurs when light enters a material, like marble, scatters within its structure, and then exits from another part of the surface. It’s a complex process to understand, let alone simulate accurately in 3D rendering.

That’s where the new Subsurface Scattering 3D Gaussian Splatting (SSS 3DGS) method comes into play. You might recognize the researchers behind it from earlier this year, when they introduced SIGNeRF, which brought ControlNet to NeRFs. Now they’ve tackled the challenge of modeling subsurface scattering in real time, with impressive fidelity and interactive material editing capabilities.

The foundation of their method is standard 3D Gaussian Splatting, where an object's geometry and appearance are represented by a set of 3D Gaussians. Each Gaussian encodes key surface properties, such as position, orientation, scale, color, and opacity.
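To make the representation concrete, here is a minimal sketch of what one such Gaussian might look like as a data structure. The class and field names are illustrative assumptions, not taken from the authors' code, and real implementations typically store color as spherical harmonics coefficients rather than a plain RGB triple. The covariance construction, Σ = R S Sᵀ Rᵀ, follows the original 3DGS formulation.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian3D:
    """Hypothetical container for one splat; field names are assumptions."""
    position: tuple  # (x, y, z) center of the Gaussian
    rotation: tuple  # orientation as a quaternion (w, x, y, z)
    scale: tuple     # per-axis extent (standard deviations)
    color: tuple     # simplified to RGB here; real code uses SH coefficients
    opacity: float   # in [0, 1]


def quat_to_matrix(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(g):
    """Build the 3x3 covariance Sigma = R S S^T R^T from rotation and scale."""
    R = quat_to_matrix(g.rotation)
    # M = R * diag(scale): scale each column of R by the matching axis extent.
    M = [[R[i][j] * g.scale[j] for j in range(3)] for i in range(3)]
    # Sigma = M * M^T, which is symmetric positive semi-definite by design.
    return [[sum(M[i][k] * M[j][k] for k in range(3)) for j in range(3)]
            for i in range(3)]


splat = Gaussian3D(position=(0.0, 0.0, 0.0), rotation=(1.0, 0.0, 0.0, 0.0),
                   scale=(1.0, 2.0, 3.0), color=(0.9, 0.9, 0.85), opacity=0.8)
print(covariance(splat))
```

Storing rotation and scale separately, instead of the covariance matrix itself, keeps the covariance valid no matter how an optimizer nudges the parameters.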

However, while 3D Gaussians effectively capture surface-level details, they struggle with the volumetric effects of light interacting beneath the surface of materials. To overcome this limitation, the researchers introduced an implicit neural network component to model these internal light interactions.

This neural network is a small multi-layer perceptron (MLP) designed to predict the radiance resulting from subsurface scattering. For each Gaussian, it takes the position, the normal, and the directions of incoming and outgoing light as input, and predicts the scattered light that contributes to the final appearance of the object.
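As a rough illustration of that input/output contract, the sketch below wires up a tiny MLP in plain Python. Everything about it is an assumption made for the example: the class name `TinySSSMLP`, the layer widths, the ReLU and softplus activations, and the random untrained weights. It is not the authors' architecture, only a picture of "position, normal, and two directions in, RGB radiance out."

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Random (untrained) weights and zero biases for one dense layer."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer, x):
    """Apply one dense layer: W x + b."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softplus(x):
    # Keeps the predicted radiance positive; this choice is an assumption.
    return [math.log1p(math.exp(v)) for v in x]


class TinySSSMLP:
    """Hypothetical stand-in for the subsurface scattering network."""

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)
        self.l1 = make_layer(12, hidden, rng)   # 4 inputs x 3 components each
        self.l2 = make_layer(hidden, hidden, rng)
        self.l3 = make_layer(hidden, 3, rng)    # RGB radiance

    def __call__(self, position, normal, light_dir, view_dir):
        x = list(position) + list(normal) + list(light_dir) + list(view_dir)
        h = relu(dense(self.l1, x))
        h = relu(dense(self.l2, h))
        return softplus(dense(self.l3, h))


mlp = TinySSSMLP()
radiance = mlp(position=(0.1, 0.2, 0.3), normal=(0.0, 0.0, 1.0),
               light_dir=(0.0, 1.0, 0.0), view_dir=(0.0, 0.0, 1.0))
print(radiance)
```

In a trained system the weights would be learned jointly with the Gaussians; here they are random, so the printed values carry no physical meaning.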

By combining this explicit surface model with the implicit volumetric model, the method captures the complex interactions of light within translucent materials, such as marble and wax, which are characterized by subsurface scattering.

The hybrid representation is optimized using a process called ray-traced differentiable rendering. This approach enables the system to refine both the explicit surface properties and the implicit SSS model simultaneously, resulting in photorealistic rendering that accurately captures both surface details and the subtle, soft lighting effects typical of materials with SSS.

However, the method initially struggled with rendering strong specular highlights. To address this, the researchers introduced a deferred shading technique. In deferred shading, the 3D Gaussians are first projected onto the image plane and rasterized to form an intermediate image representation. Instead of computing the final shading during this rasterization step, the method delays these calculations until after rasterization is complete. This means that shading is performed in image space rather than in the original 3D space, allowing for more precise and detailed rendering of specular highlights.
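The difference between the two orderings can be seen in a toy single-pixel example. The sketch below assumes a G-buffer that holds only a blended normal and uses Blinn-Phong as a stand-in specular model; both are simplifications chosen for illustration, and the function names are hypothetical rather than taken from the paper.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong_specular(normal, light_dir, view_dir, shininess=64.0):
    """Stand-in specular term; the real shading model may differ."""
    half = normalize([l + v for l, v in zip(light_dir, view_dir)])
    return max(0.0, dot(normalize(normal), half)) ** shininess


def blend_weights(samples):
    """Front-to-back alpha compositing weights for one pixel."""
    weights, transmittance = [], 1.0
    for s in samples:
        weights.append(transmittance * s["alpha"])
        transmittance *= 1.0 - s["alpha"]
    return weights


def shade_per_gaussian(samples, light_dir, view_dir):
    """Shade every Gaussian first, then blend the shaded results."""
    weights = blend_weights(samples)
    return sum(w * blinn_phong_specular(s["normal"], light_dir, view_dir)
               for w, s in zip(weights, samples))


def shade_deferred(samples, light_dir, view_dir):
    """Blend normals into a per-pixel G-buffer entry, then shade once."""
    weights = blend_weights(samples)
    pixel_normal = [sum(w * s["normal"][i] for w, s in zip(weights, samples))
                    for i in range(3)]
    return blinn_phong_specular(pixel_normal, light_dir, view_dir)


# Two overlapping Gaussians whose normals tilt slightly in opposite directions.
samples = [
    {"alpha": 0.5, "normal": normalize([0.2, 0.0, 1.0])},
    {"alpha": 1.0, "normal": normalize([-0.2, 0.0, 1.0])},
]
light_dir = view_dir = [0.0, 0.0, 1.0]

print("per-Gaussian shading:", shade_per_gaussian(samples, light_dir, view_dir))
print("deferred shading:    ", shade_deferred(samples, light_dir, view_dir))
```

In this toy case the per-Gaussian normals disagree, so shading each one and then blending dims the highlight, while blending the normals first and shading once per pixel keeps the peak sharp.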

Excitingly, this method was trained on a single NVIDIA RTX 4090 GPU, which means that consumers could potentially run it on high-end, but still accessible, hardware once it becomes available. Rendering reaches around 100 frames per second, comfortably above real-time rates. Training takes about two hours, longer than traditional 3D Gaussian Splatting, but the fact that it can be done on consumer-grade hardware is a significant advantage.
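To put that frame rate in perspective, a quick back-of-the-envelope conversion to per-frame time helps. The 30 and 60 frames-per-second reference points below are common rules of thumb for "real time," used here as assumptions rather than figures from the paper.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


for label, fps in [("reported SSS 3DGS rate", 100),
                   ("assumed smooth target", 60),
                   ("assumed real-time floor", 30)]:
    print(f"{label}: {fps} fps = {frame_budget_ms(fps):.1f} ms per frame")
```

At roughly 100 frames per second, each frame takes about 10 ms, which leaves headroom under a 33.3 ms budget for a 30 fps application.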

The method builds on the original Inria 3DGS implementation, and given that SIGNeRF has already been integrated into Nerfstudio, we might see this new approach follow suit. However, this may take some time, as the work is likely being submitted to a conference.

For more details, including links to the dataset and code, visit the project page at sss.jdihlmann.com. The code isn’t available yet, but the researchers have indicated on their GitHub page that it will be released around October of this year.
