Michael Rubloff

Sep 9, 2025

At SIGGRAPH 2025, NVIDIA unveiled NuRec, its latest leap in neural reconstruction technology. Building on a rapid succession of breakthroughs (Instant-NGP in 2022, 3D Gaussian Splatting in 2023, and subsequent extensions like Gaussian ray tracing), NuRec signals another step toward merging real-world environments with simulation and robotics.

To better understand this moment, I sat down with Nick Schneider, NVIDIA’s Neural Reconstruction Manager, who joined the company after a decade in the automotive industry and a stint leading autonomous driving validation at TORC Robotics. Our conversation ranged from his first hands-on work with radiance field representations to the role of standardization, robotics, and simulation in shaping what he calls “a future where everything that moves should be autonomous.”

Interview

Michael: Nick, thank you for joining me today at SIGGRAPH. NVIDIA just announced NuRec during the special address, and I’m excited to dive into it with you.

Nick: Thanks, Michael. It’s great to be here.

Michael: SIGGRAPH has always been central to the development of radiance field representations, and it’s exciting to see how much has emerged here. Just a few years ago, Instant-NGP won Best Paper at SIGGRAPH, and the following year 3D Gaussian Splatting won. Now we’re here with the announcement of NuRec. Do you remember when you first encountered radiance field representations? Was it NeRF, Instant-NGP, or something else?

Nick: My background is a bit different. Before joining NVIDIA, I spent over ten years in the automotive industry. In 2023, I was at TORC Robotics, leading the team responsible for validating our autonomous driving stack.

That’s a difficult challenge—when you’re running 40-ton trucks, mistakes can be scary. We needed better simulation, but most conventional simulators weren’t good enough. Our AI-based software didn’t behave the same way in simulation as it did in the real world.

So, we explored new methods. We began with NeRFs but quickly moved to Gaussian Splatting because we needed speed in our pipeline and the ability to composite different assets. That was my first hands-on experience developing with NeRFs and Gaussian Splatting for simulation.

Michael: The pace of progress has been remarkable. After 3D Gaussian Splatting, NVIDIA introduced 3D Gaussian Ray Tracing and then the 3D Gaussian Unscented Transform (3DGUT). Why is something like 3DGUT so valuable for simulation?

Nick: Great question. With 3D Gaussian Ray Tracing, we could support more camera models, like fisheye lenses and rolling shutters—critical for autonomous vehicles with diverse sensors. But the challenge was speed. It wasn’t fast enough to be directly applicable.

That’s where the 3D Gaussian Unscented Transform came in. It made the system as fast as rasterization while retaining the accuracy of ray tracing, which opened up practical applications for robotics and simulation.
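The unscented transform Nick mentions can be illustrated in a few lines. Instead of linearizing the camera projection around each Gaussian's center, as the original 3D Gaussian Splatting rasterizer does, it pushes a small set of sigma points (2n + 1 = 7 for a 3D Gaussian) through the exact camera model and refits a 2D Gaussian to the projected points, so a distorted lens such as a fisheye needs no special-case math. This is our own illustrative sketch, not code from NuRec or 3DGUT; the equidistant fisheye model, the function names, and the α, β, κ defaults are assumptions chosen for readability.

```python
import math

# Illustrative sketch only: not NuRec or 3DGUT source code. The function names,
# the equidistant fisheye model and the alpha/beta/kappa defaults are assumptions.


def cholesky3(m):
    """Return lower-triangular L with L @ L^T == m for a 3x3 SPD matrix m."""
    L = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L


def fisheye_project(p, f=300.0, cx=320.0, cy=240.0):
    """Equidistant fisheye: image radius = f * theta (angle from the optical axis)."""
    x, y, z = p
    rho = math.hypot(x, y)
    if rho < 1e-12:  # a point on the optical axis maps to the principal point
        return (cx, cy)
    theta = math.atan2(rho, z)
    scale = f * theta / rho
    return (cx + scale * x, cy + scale * y)


def unscented_project(mean, cov, project, alpha=1.0, beta=2.0, kappa=0.0):
    """Approximate the 2D mean/covariance of a 3D Gaussian pushed through `project`."""
    n = 3
    lam = alpha ** 2 * (n + kappa) - n
    # Columns of L (from the scaled covariance) offset the 2n + 1 sigma points.
    L = cholesky3([[(n + lam) * c for c in row] for row in cov])
    sigma = [list(mean)]
    for i in range(n):
        col = [L[r][i] for r in range(n)]
        sigma.append([mean[r] + col[r] for r in range(n)])
        sigma.append([mean[r] - col[r] for r in range(n)])
    # Standard unscented-transform weights for the mean (wm) and covariance (wc).
    wm = [lam / (n + lam)] + [1.0 / (2 * (n + lam))] * (2 * n)
    wc = [wm[0] + (1 - alpha ** 2 + beta)] + wm[1:]
    # Project every sigma point through the exact (nonlinear) camera model.
    pts = [project(s) for s in sigma]
    mu = [sum(w * p[k] for w, p in zip(wm, pts)) for k in range(2)]
    cov2 = [[sum(w * (p[a] - mu[a]) * (p[b] - mu[b]) for w, p in zip(wc, pts))
             for b in range(2)] for a in range(2)]
    return mu, cov2


if __name__ == "__main__":
    mean = [0.3, -0.1, 2.0]
    cov = [[0.04, 0.01, 0.0], [0.01, 0.09, 0.02], [0.0, 0.02, 0.16]]
    mu, cov2 = unscented_project(mean, cov, fisheye_project)
    print("projected mean:", mu)
    print("projected covariance:", cov2)
```

With a linear `project` function, the sigma-point estimate reproduces the exact projected mean and covariance; the approximation only matters when the camera model bends straight lines, as a fisheye lens does.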

Michael: One of the critiques of early Gaussian Splatting was that it required pinhole cameras. Extensions like 3DGUT have opened the door to robotics. We also saw USDZ conversion added recently. Why is that so important for developers?

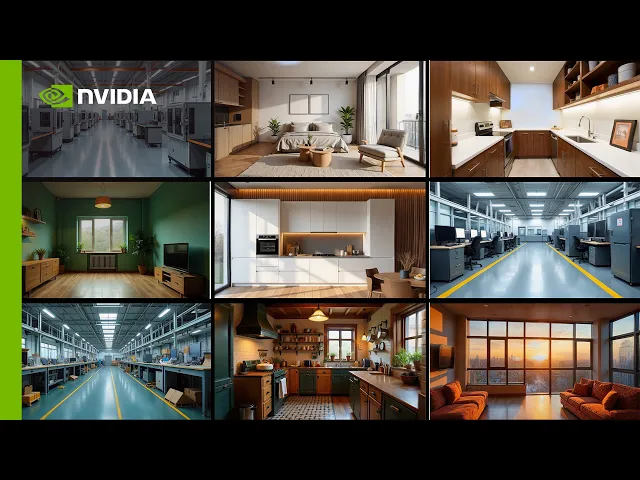

Nick: It’s key to making Gaussian Splatting truly usable in Omniverse. Now you can record your living room with a cellphone camera, for example, train a reconstruction from that footage, and bring it into simulation.

Once you have it in USD, you can render it in Omniverse with NuRec and then layer applications on top—say, robotics with Isaac Sim. That means anyone can capture their environment and immediately test agents inside it.

On a personal level, I think of kids recording their room and then dropping an R2-D2 in it. It democratizes simulation in a powerful way.

Michael: And it’s Apache 2 licensed, right?

Nick: Yes, fully open and commercially usable. That flexibility is important. Developers can use it for robotics, but also for creative applications—games, experiences, even playful ideas like a “roller coaster tycoon” in your living room.

Michael: We’ve also seen companies like Voxel51 adopt NuRec. How are they using it?

Nick: Voxel51 provides data quality tools. Good data is essential for reconstruction—the better the input, the better the output. They’ve integrated NuRec to assess and improve input data fidelity, which makes reconstructions more robust. It’s a critical piece of the ecosystem.

Michael: Another feature I wanted to touch on is Fixer. What does that add?

Nick: Fixer addresses gaps in data. Say you trained on data captured from one lane of a road but now want to simulate driving two lanes over. The original data may not cover that.

Fixer leverages Cosmos, a model trained on millions of scenes, to fill those gaps. It transfers prior knowledge into new reconstructions, enabling novel views and higher fidelity where data was missing.

Michael: NuRec also includes nRender. Can you explain its role?

Nick: nRender is a highly optimized rendering library within NuRec. If you want to do reinforcement learning, you need millions of scene variations—and that requires extremely fast rendering. nRender accelerates Gaussian Splatting to make that feasible.

Michael: Beyond autonomous vehicles, where else do you see robotics benefiting from these representations?

Nick: Honestly, I think everything that moves should eventually be autonomous. To train those agents, you need accurate simulations of the real world across industries. Radiance field methods bring that possibility closer.

Michael: Standardization has been a big topic. With so much rapid research, when is the right time to standardize Gaussian Splatting?

Nick: That’s a tough one. Every year, new papers push the field forward—MLPs moving Gaussians in space or time, for example. That complexity makes it hard to lock down a standard too soon.

But it’s important. Industry adoption is already happening. A standard must be flexible and extensible, yet not so loose that it fails as a standard. Finding that balance will be a challenge, but it’s necessary.

Michael: I also wanted to touch on CARLA. How is NuRec being used there?

Nick: We’ve reconstructed over 80 scenarios now and contributed them to the CARLA community. Researchers can use those reconstructions to drive different routes, create novel views, and expand autonomous driving research. It’s about making roads safer by bringing real-world complexity into simulation.

Michael: That’s exciting. And NuRec also includes the Asset Harvester, right?

Nick: Yes. Asset Harvester allows you to extract assets from real-world data and build libraries. It includes a generative component that can infer unseen views, enabling novel scene composition. That’s where radiance fields combine with generative models to open entirely new workflows.

Michael: Incredible. Nick, thank you so much for joining me today. I really appreciate the conversation.

Nick: Thanks for having me.
