So far, the vast majority of Gaussian Splatting papers have used rasterization, achieving the lightning-fast frame rates and interactivity synonymous with 3DGS. Today, things are taking a step forward with the introduction of 3D Gaussian Ray Tracing (3DGRT).

A key point is understanding the shift from rasterization, used in traditional Gaussian Splatting, to a ray tracing-based approach. For a long time, ray tracing was held back by hardware limitations, but modern GPUs with hardware-accelerated ray tracing have made it practical in real time.

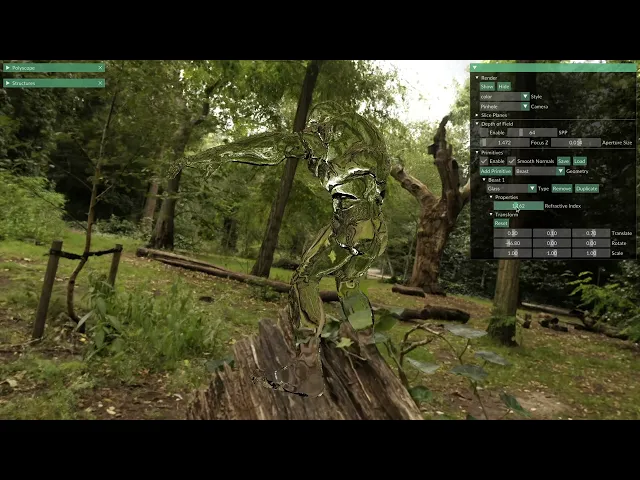

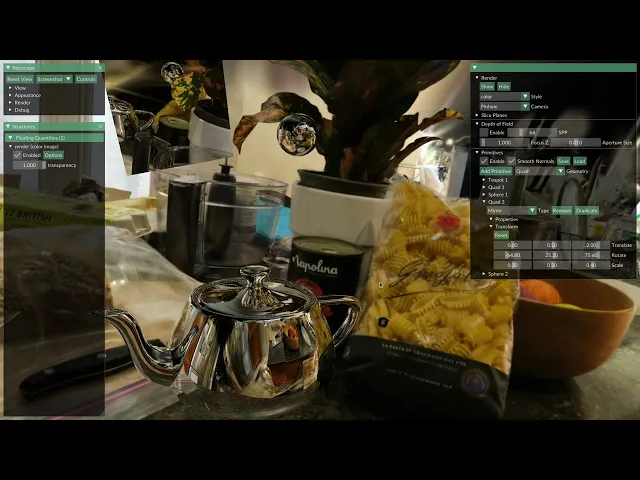

Users of Gaussian Splatting know that rasterization requires a perfect pinhole camera, which rules out fisheye lenses. It also cannot efficiently simulate the secondary rays needed for phenomena like reflection, refraction, and shadows. Some works, such as RadSplat and Gaussians on the Move, have developed workarounds for some of these limitations.
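To illustrate why a ray tracer sidesteps the pinhole restriction, here is a minimal sketch that turns a fisheye pixel into a ray direction. It assumes an ideal equidistant fisheye model, where a pixel's distance from the principal point equals focal length times the angle from the optical axis; real lenses usually add distortion terms. The function name and parameters are hypothetical and not part of 3DGRT. Each pixel simply gets its own ray, which does not fit the single linear projection a rasterizer relies on.

```python
import math


def fisheye_ray_direction(u, v, fx, fy, cx, cy):
    """Map pixel (u, v) to a unit ray direction in camera space.

    Hypothetical helper assuming an ideal equidistant fisheye model
    (radius = focal * theta). The camera looks down +z.
    """
    # Normalized offsets from the principal point
    x = (u - cx) / fx
    y = (v - cy) / fy
    r = math.hypot(x, y)  # equals theta under the equidistant model
    if r < 1e-12:
        return (0.0, 0.0, 1.0)  # the optical center looks straight ahead
    theta = r  # angle from the optical axis, in radians
    s = math.sin(theta) / r
    return (x * s, y * s, math.cos(theta))


# A pixel 90 degrees off-axis still produces a valid ray
f = 400.0
d = fisheye_ray_direction(640 + f * math.pi / 2, 360, f, f, 640, 360)
```

A pinhole camera would need an infinitely large image plane to capture that 90-degree ray, which is why very wide fisheye fields of view cannot be handled by projection-based rasterization.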

Enter 3DGRT, which introduces a fast, differentiable ray tracer for Gaussian particles.

This marks a fundamental departure from Gaussian Splatting's traditional rasterization methodology and brings several benefits. It unlocks numerous applications and secondary lighting and camera effects, including refractions, shadows, depth of field, and mirrors. It’s important to note that this is not a finished product: 3DGRT demonstrates forward simulation of these effects, and it will hopefully serve as a foundation for further research in this direction.
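Mirrors and refractions come down to spawning new rays where a primary ray meets a surface. The sketch below uses the standard reflection formula and Snell's law for the refracted direction. It is generic ray-tracing math, not code from 3DGRT, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def refract(d, n, eta):
    """Refract unit direction d through unit normal n (n points against d).

    eta is the ratio n1 / n2 of refractive indices. Returns None on
    total internal reflection, when no transmitted ray exists.
    """
    cos_i = -dot(d, n)
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + (eta * cos_i - cos_t) * ni for di, ni in zip(d, n))


# A ray hitting a horizontal surface at 45 degrees
s = math.sqrt(0.5)
incoming, normal = (s, -s, 0.0), (0.0, 1.0, 0.0)
mirrored = reflect(incoming, normal)               # bounces back up
into_glass = refract(incoming, normal, 1.0 / 1.5)  # bends toward the normal
```

Going the other way, from glass into air at the same angle (`eta = 1.5`), `refract` returns `None` because the ray is totally internally reflected.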

3DGRT comprises two main components: a strategy to represent particles in an acceleration structure and a rendering algorithm that casts rays and gathers batches of intersections.

First, each particle is enclosed in an icosahedron mesh. After testing several shapes, the researchers found that a stretched icosahedron provided the strongest results. These meshes bound the particles tightly while taking advantage of hardware-accelerated ray-triangle intersections, significantly reducing false-positive intersections. The size of each bounding proxy is adaptively clamped based on the particle's opacity, which reduces the number of low-contribution particles processed and improves efficiency.
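The bounding step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes a particle's response is its opacity times an unnormalized Gaussian falloff, and that the proxy is scaled to enclose the region where that response stays above a hypothetical cutoff `alpha_min`. Under that assumption, low-opacity particles get smaller proxies, and particles whose opacity never reaches the cutoff get none.

```python
import math

PHI = (1.0 + math.sqrt(5.0)) / 2.0

# 12 vertices of a regular icosahedron with edge length 2
ICO_VERTS = (
    [(0.0, a, b * PHI) for a in (-1, 1) for b in (-1, 1)]
    + [(a, b * PHI, 0.0) for a in (-1, 1) for b in (-1, 1)]
    + [(a * PHI, 0.0, b) for a in (-1, 1) for b in (-1, 1)]
)

# Inradius for edge length 2; dividing by it makes every face lie at
# distance 1 from the center, so the icosahedron encloses the unit sphere.
ICO_INRADIUS = PHI * PHI / math.sqrt(3.0)


def clamp_radius(opacity, alpha_min):
    """Std-devs at which opacity * exp(-x^2 / 2) falls to alpha_min (assumed model)."""
    if opacity <= alpha_min:
        return None  # never contributes above the cutoff: no proxy needed
    return math.sqrt(2.0 * math.log(opacity / alpha_min))


def bounding_icosahedron(mean, scales, rotation, opacity, alpha_min=0.01):
    """Return 12 world-space vertices of a stretched icosahedron, or None.

    mean: (x, y, z); scales: per-axis standard deviations;
    rotation: 3x3 row-major rotation matrix given as a list of rows.
    """
    k = clamp_radius(opacity, alpha_min)
    if k is None:
        return None
    verts = []
    for v in ICO_VERTS:
        # Stretch the unit-sphere-bounding icosahedron along the particle's axes
        local = [v[i] / ICO_INRADIUS * scales[i] * k for i in range(3)]
        world = [mean[r] + sum(rotation[r][c] * local[c] for c in range(3))
                 for r in range(3)]
        verts.append(tuple(world))
    return verts
```

Because an affine map preserves containment, stretching an icosahedron that encloses the unit sphere yields a mesh that encloses the particle's ellipsoid at the chosen cutoff.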

Next, a Bounding Volume Hierarchy (BVH) is built over these proxy meshes and used to accelerate the ray tracing.
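A toy version of the acceleration structure shows why the BVH matters: instead of testing every particle, a ray only descends into subtrees whose bounding boxes it actually hits. This is a minimal CPU sketch using axis-aligned boxes and a median split. 3DGRT builds its BVH over the proxy triangles and relies on GPU ray-tracing hardware, and every name here is hypothetical.

```python
import math


def ray_hits_box(origin, direction, lo, hi):
    """Slab test: True if the ray hits the box [lo, hi] at some t >= 0."""
    t_near, t_far = 0.0, math.inf
    for a in range(3):
        if direction[a] == 0.0:
            # Ray parallel to this slab: it must already lie between the planes
            if origin[a] < lo[a] or origin[a] > hi[a]:
                return False
            continue
        ta = (lo[a] - origin[a]) / direction[a]
        tb = (hi[a] - origin[a]) / direction[a]
        t_near = max(t_near, min(ta, tb))
        t_far = min(t_far, max(ta, tb))
        if t_near > t_far:
            return False
    return True


def build_bvh(boxes, indices=None, leaf_size=2):
    """Recursively split primitives at the median centroid of the widest axis."""
    if indices is None:
        indices = list(range(len(boxes)))
    lo = tuple(min(boxes[i][0][a] for i in indices) for a in range(3))
    hi = tuple(max(boxes[i][1][a] for i in indices) for a in range(3))
    if len(indices) <= leaf_size:
        return {"lo": lo, "hi": hi, "prims": indices}
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    indices = sorted(indices, key=lambda i: boxes[i][0][axis] + boxes[i][1][axis])
    mid = len(indices) // 2
    return {"lo": lo, "hi": hi,
            "left": build_bvh(boxes, indices[:mid], leaf_size),
            "right": build_bvh(boxes, indices[mid:], leaf_size)}


def traverse(node, boxes, origin, direction, out):
    """Collect candidate primitives; subtrees whose box is missed are skipped."""
    if not ray_hits_box(origin, direction, node["lo"], node["hi"]):
        return out
    if "prims" in node:
        out.extend(i for i in node["prims"]
                   if ray_hits_box(origin, direction, *boxes[i]))
    else:
        traverse(node["left"], boxes, origin, direction, out)
        traverse(node["right"], boxes, origin, direction, out)
    return out


# Four unit boxes along +x and one box off to the side in +y
boxes = [((3 * i - 0.5, -0.5, -0.5), (3 * i + 0.5, 0.5, 0.5)) for i in range(4)]
boxes.append(((-0.5, 4.5, -0.5), (0.5, 5.5, 0.5)))
root = build_bvh(boxes)
candidates = traverse(root, boxes, (-5.0, 0.0, 0.0), (1.0, 0.0, 0.0), [])
```

In a real ray tracer, the leaf test would be an exact ray-triangle intersection against the proxy mesh rather than a box test.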

The ray tracer uses a hits-based marching algorithm that efficiently gathers ordered intersections. This ensures that all particles intersected by a ray are processed in a consistent order, which preserves the algorithm's differentiability. The algorithm also includes a backward pass that computes gradients with respect to the particle parameters, so the optimizer can adjust them during training to improve rendering quality.
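Because hits are processed in order, the per-ray color takes the form of standard front-to-back alpha compositing, and its gradient has a closed form. The sketch below assumes scalar colors and per-hit opacities `alphas` that have already been evaluated along the ray. It illustrates the kind of derivative a backward pass needs and is not 3DGRT's actual kernel.

```python
def composite(colors, alphas):
    """Front-to-back: C = sum_i c_i * a_i * T_i, with T_i = prod_{j<i} (1 - a_j)."""
    color, T = 0.0, 1.0
    for c, a in zip(colors, alphas):
        color += c * a * T
        T *= 1.0 - a
    return color


def composite_backward(colors, alphas):
    """Return (dC/dc_i, dC/da_i) for every hit, assuming every a_i < 1."""
    n = len(colors)
    trans = [1.0] * n  # T_i, transmittance in front of hit i
    for i in range(1, n):
        trans[i] = trans[i - 1] * (1.0 - alphas[i - 1])
    grad_c = [alphas[i] * trans[i] for i in range(n)]
    grad_a = [0.0] * n
    suffix = 0.0  # sum_{j>i} c_j a_j T_j: every such T_j contains (1 - a_i)
    for i in reversed(range(n)):
        grad_a[i] = colors[i] * trans[i] - suffix / (1.0 - alphas[i])
        suffix += colors[i] * alphas[i] * trans[i]
    return grad_c, grad_a


colors, alphas = [0.2, 0.9, 0.5], [0.3, 0.6, 0.4]
pixel = composite(colors, alphas)
grad_c, grad_a = composite_backward(colors, alphas)
```

The backward loop runs back-to-front so that the suffix sum of contributions from hits behind hit `i` is available in a single pass; a finite-difference check confirms the closed-form gradients.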

The process then repeats, casting the ray again from the last rendered particle to gather the next batch of intersections. The algorithm terminates once all particles intersecting the ray have been processed or the accumulated transmittance drops below a minimum threshold, so rays that are already nearly opaque stop early.
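The marching loop can be sketched on the CPU as follows. It is a minimal illustration under assumptions: `hits_along_ray` is a hypothetical stand-in for the hardware traversal, listing `(distance, color, alpha)` for every proxy the ray crosses; `k` is the batch size; and `T_MIN` is an assumed transmittance cutoff. Each round keeps only the `k` closest hits beyond the last processed distance, composites them in order, and restarts from the last rendered particle.

```python
import heapq

T_MIN = 1e-3  # assumed cutoff: stop once the ray is effectively opaque


def march(hits_along_ray, k=4):
    """Hits-based marching in batches of k ordered intersections.

    Assumes distinct hit distances; a real implementation would break
    ties (for example by particle ID) so no hit is skipped or repeated.
    Returns (color, transmittance, number_of_rounds).
    """
    color, T, t_last, rounds = 0.0, 1.0, -float("inf"), 0
    while True:
        # Gather the k closest hits strictly beyond the last processed one
        batch = heapq.nsmallest(k, (h for h in hits_along_ray if h[0] > t_last))
        if not batch:
            break  # every intersected particle has been processed
        rounds += 1
        for t, c, a in batch:  # nsmallest returns the batch sorted by distance
            color += c * a * T
            T *= 1.0 - a
            t_last = t
            if T < T_MIN:
                return color, T, rounds  # early termination
    return color, T, rounds


# Six half-transparent hits, listed out of order
hits = [(5.0, 1.0, 0.5), (1.0, 1.0, 0.5), (3.0, 1.0, 0.5),
        (2.0, 1.0, 0.5), (6.0, 1.0, 0.5), (4.0, 1.0, 0.5)]
color, T, rounds = march(hits, k=4)
```

Here the ray needs two rounds (four hits, then two), and the batch size only changes how many rounds are needed, not the final color.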

3DGRT takes about 50% longer to train than traditional Gaussian Splatting and renders somewhat slower, at 55 to 190 fps. File sizes range from 300 to 500 MB, with a cap of three million Gaussians. That said, just a year ago 3DGS files were over 1 GB, and compression has since brought them below 20 MB. 3DGRT is meant to open up additional lines of research. The authors note that 3DGRT is compatible with several follow-up works to 3DGS but kept the structure identical to the original 3DGS for a fair one-to-one comparison. This means existing compression methods should, in principle, plug in directly.
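For a rough sense of how file size scales with Gaussian count, the estimate below assumes the uncompressed PLY layout written by the original 3DGS code: 62 float32 attributes per Gaussian (position, an unused normal, degree-3 spherical-harmonic color, opacity, scale, and a rotation quaternion). 3DGRT's own storage may differ, so treat this as back-of-the-envelope arithmetic only.

```python
# Assumed per-Gaussian attribute counts in the original 3DGS PLY export
ATTRIBUTES = {
    "position": 3,
    "normal": 3,    # written to the file but unused by the renderer
    "sh_dc": 3,     # degree-0 spherical-harmonic color (RGB)
    "sh_rest": 45,  # degrees 1-3: 15 coefficients per RGB channel
    "opacity": 1,
    "scale": 3,
    "rotation": 4,  # quaternion
}
BYTES_PER_FLOAT = 4  # float32


def bytes_per_gaussian():
    return sum(ATTRIBUTES.values()) * BYTES_PER_FLOAT


def estimate_mb(num_gaussians):
    """Uncompressed size in megabytes (10**6 bytes), ignoring the PLY header."""
    return num_gaussians * bytes_per_gaussian() / 1e6


def gaussians_for_mb(size_mb):
    """Inverse estimate: how many Gaussians fit in size_mb under this layout."""
    return size_mb * 1e6 / bytes_per_gaussian()


at_cap = estimate_mb(3_000_000)  # size at the three-million-Gaussian cap
```

At 248 bytes per Gaussian, the cap works out to about 744 MB under this layout. This is also why compression methods that quantize or prune the spherical-harmonic coefficients, which make up most of each record, can shrink files so dramatically.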

One potential concern with using 3DGRT in dynamic scenes is that the BVH needs to be rebuilt regularly, as it already is during training, which adds compute cost. One notable application the authors discuss is autonomous driving: the ray tracer's ability to handle complex camera models makes it valuable for simulating and testing autonomous driving scenarios.

There is little information about whether NVIDIA will release the code, when it might be available, or whether there will be a product similar to Instant NGP. The researchers measured their results on an A6000 but did not mention the memory required for training. Still, a promising new line of Radiance Field research appears to have emerged, and I am excited to see continued progress. Their project page is full of incredible videos and more information, and I highly suggest visiting it.