Michael Rubloff

Apr 26, 2024

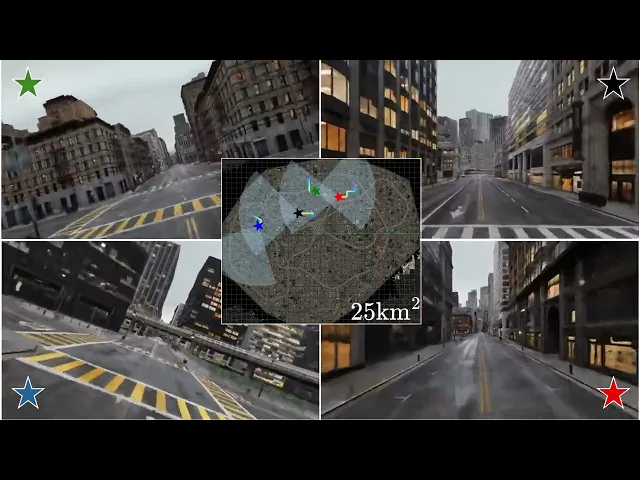

If you think you've seen a large-scale NeRF before, have you seen one built from a 25 km² capture spread across roughly 260,000 images? No one would fault you for doubting that NeRFs can scale that far. This clearly isn't something that fits on a single RTX 4090, so how can NeRFs scale across multiple GPUs to meet these demands?

Several exciting large-scale radiance field methods this year, such as VastGaussian, GF-NeRF, and CityGaussian, tackle massive captures, but NeRF-XL shows how NeRFs can be scaled jointly across multiple GPUs.

NeRF-XL starts by partitioning the large-scale scene into distinct, non-overlapping regions, one per GPU. Because no two GPUs model the same space, every computation a GPU performs is unique and necessary, which eliminates artifacts from overlapping regions and avoids wasting GPU memory on redundant content. This setup also simplifies combining the results from all GPUs: an optimized global gather operation transfers only the information required to render the final image.
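
NeRF-XL's code has not been released, so the following is only a minimal Python sketch of why disjoint regions keep the gather small. It assumes a single ray, grayscale color, and "GPUs" simulated as disjoint intervals along that ray (in the paper the regions are 3D). Each simulated GPU renders only its own samples with the standard NeRF quadrature and returns two numbers, a partial color and the transmittance through its segment; merging those front to back reproduces the single-GPU result. All function names are hypothetical.

```python
import bisect
import math
import random
from typing import List, Sequence, Tuple


def render_segment(sigmas: Sequence[float], colors: Sequence[float],
                   deltas: Sequence[float]) -> Tuple[float, float]:
    """Volume-render one contiguous run of samples (standard NeRF quadrature).

    Returns (partial color, transmittance through the whole run)."""
    color, trans = 0.0, 1.0
    for sigma, c, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        color += trans * alpha * c
        trans *= 1.0 - alpha
    return color, trans


def assign_to_gpus(ts: Sequence[float], boundaries: Sequence[float]) -> List[List[int]]:
    """Hypothetical partition: GPU k owns samples with boundaries[k] <= t < boundaries[k+1]."""
    n_gpus = len(boundaries) - 1
    buckets: List[List[int]] = [[] for _ in range(n_gpus)]
    for i, t in enumerate(ts):
        k = min(max(bisect.bisect_right(boundaries, t) - 1, 0), n_gpus - 1)
        buckets[k].append(i)
    return buckets


def composite(partials: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Merge gathered (color, transmittance) pairs, ordered near to far along the ray."""
    color, trans = 0.0, 1.0
    for c_k, t_k in partials:
        color += trans * c_k  # segment k is attenuated by every segment in front of it
        trans *= t_k
    return color, trans


def distributed_render(ts, sigmas, colors, deltas, boundaries) -> Tuple[float, float]:
    """Each simulated GPU renders its own samples; only two floats per GPU are 'gathered'."""
    partials = []
    for idx in assign_to_gpus(ts, boundaries):
        partials.append(render_segment([sigmas[i] for i in idx],
                                       [colors[i] for i in idx],
                                       [deltas[i] for i in idx]))
    return composite(partials)


if __name__ == "__main__":
    random.seed(0)
    n = 64
    ts = sorted(random.uniform(0.0, 4.0) for _ in range(n))  # sample depths along the ray
    sigmas = [random.uniform(0.0, 2.0) for _ in range(n)]
    colors = [random.random() for _ in range(n)]
    deltas = [4.0 / n] * n
    full = render_segment(sigmas, colors, deltas)
    split = distributed_render(ts, sigmas, colors, deltas, [0.0, 1.0, 2.0, 3.0, 4.0])
    print(full, split)  # the two pairs agree up to floating-point rounding
```

With RGB color the per-ray payload would be four floats per GPU (three color channels plus transmittance), no matter how many samples each GPU evaluated along that ray.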

NeRF-XL also revisits the volume rendering equation used in NeRFs. By reformulating it, NeRF-XL reduces the amount of inter-GPU communication needed while still enabling joint training: each GPU processes its own segment of the scene, but all of them contribute to a single, unified model. Computation stays local while the learning process remains coupled across GPUs, improving both efficiency and the consistency of the final 3D reconstruction.
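
The property that makes this possible is that the standard volume rendering integral splits cleanly over consecutive segments of a ray. The derivation below is our own restatement of that property (the paper's notation may differ), with the ray interval $[t_0, t_K]$ divided into $K$ segments $[t_{k-1}, t_k]$, each owned by one GPU:

```latex
C(\mathbf{r}) = \int_{t_0}^{t_K} T(t)\,\sigma(t)\,\mathbf{c}(t)\,dt,
\qquad T(t) = \exp\!\Big(-\int_{t_0}^{t} \sigma(s)\,ds\Big)

% Within segment k, define the local transmittance and the segment's two outputs
\hat{T}_k(t) = \exp\!\Big(-\int_{t_{k-1}}^{t} \sigma(s)\,ds\Big), \qquad
T_k = \hat{T}_k(t_k), \qquad
C_k = \int_{t_{k-1}}^{t_k} \hat{T}_k(t)\,\sigma(t)\,\mathbf{c}(t)\,dt

% For t in [t_{k-1}, t_k], T(t) = \Big(\prod_{j=1}^{k-1} T_j\Big)\,\hat{T}_k(t), hence
C(\mathbf{r}) = \sum_{k=1}^{K} \Big(\prod_{j=1}^{k-1} T_j\Big)\, C_k
```

Each GPU therefore only needs to contribute its $C_k$ and $T_k$ for a ray; the final color follows from a cheap product-and-sum over the gathered values.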

To keep the workload balanced, NeRF-XL uses a spatial partitioning strategy that distributes the scene according to where its content lies, so that each GPU carries a similar computational load. When a Structure from Motion (SfM) point cloud is available, the scene is split so that each GPU receives roughly the same number of points; when it is not, NeRF-XL discretizes randomly sampled training rays into 3D samples and balances those instead.
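
One simple way to illustrate this kind of load balancing is a kd-tree-style median split: repeatedly cut the scene's bounding box along its widest axis at the median point, so every region ends up holding the same number of points. This is an assumption for illustration, not necessarily the exact scheme NeRF-XL uses. The function names are hypothetical, the number of GPUs is assumed to be a power of two, and the no-SfM fallback uses simple evenly spaced samples along each ray.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Box = Tuple[Point, Point]  # (min corner, max corner)


def samples_from_rays(rays: Sequence[Tuple[Point, Point]], near: float, far: float,
                      n_per_ray: int) -> List[Point]:
    """Fallback when no SfM points exist: discretize rays (origin, unit direction)
    into evenly spaced 3D sample points between near and far."""
    step = (far - near) / n_per_ray
    points: List[Point] = []
    for (ox, oy, oz), (dx, dy, dz) in rays:
        for i in range(n_per_ray):
            t = near + (i + 0.5) * step  # midpoint of the i-th interval
            points.append((ox + t * dx, oy + t * dy, oz + t * dz))
    return points


def kd_partition(points: List[Point], box: Box, n_parts: int) -> List[Tuple[Box, int]]:
    """Split `box` into n_parts regions holding (nearly) equal numbers of points.

    Returns one (region box, point count) pair per region, i.e. per GPU."""
    if n_parts < 1 or n_parts & (n_parts - 1):
        raise ValueError("this sketch assumes n_parts is a power of two")
    if n_parts == 1:
        return [(box, len(points))]
    lo, hi = box
    axis = max(range(3), key=lambda a: hi[a] - lo[a])  # widest axis of the box
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2
    split = ordered[mid][axis]  # median coordinate becomes the cutting plane
    left_hi = tuple(split if a == axis else hi[a] for a in range(3))
    right_lo = tuple(split if a == axis else lo[a] for a in range(3))
    half = n_parts // 2
    return (kd_partition(ordered[:mid], (lo, left_hi), half)
            + kd_partition(ordered[mid:], (right_lo, hi), half))


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical SfM-like cloud: a dense cluster plus a sparse background
    cloud = [(random.uniform(0.1, 0.3), random.uniform(0.1, 0.3), random.random())
             for _ in range(800)]
    cloud += [(random.random(), random.random(), random.random()) for _ in range(200)]
    unit_box: Box = ((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
    for region, count in kd_partition(cloud, unit_box, 4):
        print(region, count)  # each region holds 250 points
```

Median splits balance point counts no matter how clustered the scene is, which is why the dense cluster above still yields four equal regions; the trade-off is that regions covering mostly empty space can become very large.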

It shouldn't surprise anyone that they use Instant NGP as the baseline. Not only is it NVIDIA's in-house method, but it has proven time and again to be a key building block for other approaches.

Roughly a month and a half ago, Brent Bartlett, a Solutions Architect at NVIDIA, posted a NeRF of a hurricane's aftermath on his LinkedIn. At the time there was no clear explanation of which method had been used, but it now appears to have been NeRF-XL.

This points to a use case that has not been fully explored yet but has large potential. Remember that NeRFs can leverage semantics. For instance, shortly after a natural disaster, a large-scale radiance field could be created and then combined with additional AI to prioritize the areas in greatest need, at a time when every minute spent can directly affect how many lives are saved.

This line of thought goes beyond the original paper, however. The code for NeRF-XL has no release date yet, but you can imagine the excitement being felt across the research, creator, and consumer worlds. While the paper demonstrates the large-scale scenes that will benefit from this scaling, it shouldn't be overlooked that smaller captures benefit as well. The larger takeaway is that multiple GPUs can be combined as needed to meet a NeRF's compute and memory demands.

You can find out more information about NeRF-XL from their project page.
