One of the interesting problems in 3D graphics is how to effectively add, remove, or alter elements within a scene while maintaining a lifelike appearance. We've seen advancements with several innovative papers addressing these issues, employing diffusion-based models to enhance scene realism and consistency.

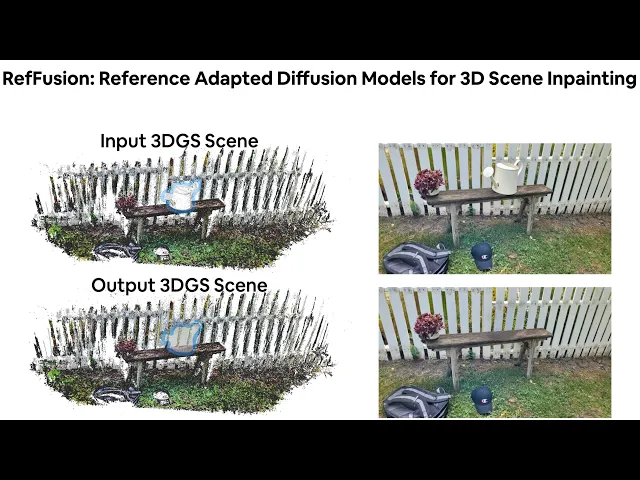

A notable example is Google's ReconFusion, which utilizes NeRFs as its foundation. NVIDIA's recently announced RefFusion, however, takes a different approach by employing Gaussian Splatting.

Unlike systems that rely on text prompts for generating 2D references, RefFusion personalizes the process using one or multiple reference images specific to the target scene. These images not only guide the visual outcome but also adapt the diffusion model to replicate the desired scene attributes accurately.

RefFusion's process begins with the selection of a reference image—essentially what the user wants the scene to ultimately resemble. This image can either be generated or supplemented with additional real images that depict the desired output. RefFusion's inputs are a reference image, masks of the desired manipulation, and training views (contributed by Gaussian Splatting).

This method effectively tailors the generative process to specific scenes, significantly improving the base upon which RefFusion operates. By eliminating reliance on text guidance during the distillation phase, it empowers RefFusion use of multi-scale score distillation, which caters to both the larger global context of a scene, in addition to local, smaller details too.

The system uses the original dataset from the Gaussian Splatting scene to create a mask that identifies which parts of the scene require inpainting. This is determined by measuring each part's contribution to volume rendering within the scene. Parts that meet or exceed a certain threshold are included in the masking group.

In areas identified by the mask, RefFusion leverages information from the reference images to dictate how these regions should be filled. The system's adaptation of the diffusion model to the reference views ensures that the inpainted content matches the existing or desired scene elements with high fidelity. Moreover, depth prediction from monocular depth estimators is utilized to align the 3D information with the 2D inpainted results, further enhancing the scene's depth accuracy and visual coherence. After they finish labeling all the parts, they are rasterized them back into training views.

To blend the scene more seamlessly, RefFusion includes a final adversarial step, where a discriminator is used to mitigate color mismatches and artifacts. This step draws inspiration from GaNeRF, particularly in the hyper parameters used for the adversarial loss.

All of these processes lead to outputs that not only achieve high-quality inpainting results but also allow for capabilities like outpainting and object insertion.

Interestingly, there has been an uptick in generative Radiance Field methods recently. Today, another interesting development was announced with InFusion, indicating that in-painted Radiance Fields using diffusion are on the rise and serve as a legitimate way to fill in missing information or manipulate a scene effectively.