Apr 4, 2023

What is Virtual Production

Virtual production is a filmmaking technique that uses digital technology to seamlessly integrate virtual environments with live-action footage. It allows filmmakers to create complex scenes, characters, and sets in a digital space and manipulate them in real time.

Traditionally, filmmakers have used previsualization techniques such as storyboards and animatics to plan shots and sequences. Virtual production goes further by offering a more immersive and interactive experience that allows creative decisions to be made on the spot. One of the most popular virtual production techniques is In-Camera VFX (ICVFX), which is the focus of this article.

In traditional filmmaking, visual effects (VFX) are created in post-production, which can put VFX artists under time and budget pressure and limits creative decision-making during the shoot. The industry adage "fix it in pre, not in post" stresses the value of addressing issues and making decisions during pre-production and production, and this shift is a major reason ICVFX has grown in popularity in recent years.

ICVFX is a technique that enables filmmakers to create and adjust visual effects in real time during production. It allows practical and digital elements to be integrated more closely, supports better-informed creative decisions on set, and can reduce post-production time and costs. It works by rendering a virtual environment in Unreal Engine and displaying it on LED walls or through rear projection behind the set, creating a realistic backdrop for actors and props. Its key advantage is that the physical elements on set are lit consistently with the virtual environment, so everyone involved, especially actors, directors, and directors of photography, shares the same view of the scene's location.

How are Virtual Production Environments Currently Made

In-Camera VFX requires capturing the virtual environment alongside the physical elements of the scene at shooting time. This is a significant challenge, and it can be solved in different ways depending on the requirements, available time, and budget. The methods for creating a virtual environment to display on an LED wall typically involve a trade-off between the time and budget needed to create the environment and the degree of photorealism and camera movement it allows. The existing methods fall into four broad categories:

360-degree images: Capturing a 360-degree image of a location is a fast and cost-effective way to generate a virtual environment. However, because the image is captured from a single point and contains no depth information, this approach does not allow the camera to move through the scene or change its viewpoint.

Projection mapping onto a 3D mesh: Another option is to capture a single image and project it onto a 3D mesh, creating a "2.5D" effect. This method allows a limited range of camera movement but requires manual effort to build the proxy mesh.

Photogrammetry: Capturing the scene directly with photogrammetry allows complete freedom of camera movement within the virtual environment. However, making the result look photorealistic requires significant effort from artists, and the process can be expensive.

3D modeling: Creating a virtual environment through 3D modeling is the most obvious and versatile approach. However, this method is often too time-consuming and costly for most productions.
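
To make the "2.5D" idea concrete: projection mapping amounts to projecting each vertex of the proxy mesh through the camera that took the photo and using the resulting image position as that vertex's texture coordinate. The sketch below assumes an ideal pinhole camera (intrinsics `K`, world-to-camera rotation `R` and translation `t`) with no lens distortion; `projection_uvs` and its parameters are hypothetical names for illustration, not part of any particular tool.

```python
import numpy as np


def projection_uvs(vertices, K, R, t, width, height):
    """Compute projection-mapping UVs for mesh vertices (hypothetical helper).

    Assumes an ideal pinhole camera: K is the 3x3 intrinsic matrix, R and t map
    world coordinates to camera coordinates, and lens distortion is ignored.
    Returns (N, 2) UVs in [0, 1] for points inside the photo, plus a mask of
    vertices that lie in front of the camera.
    """
    cam = vertices @ R.T + t           # world -> camera coordinates, shape (N, 3)
    pix = cam @ K.T                    # camera -> homogeneous pixel coordinates
    pix = pix[:, :2] / pix[:, 2:3]     # perspective divide (assumes depth != 0)
    u = pix[:, 0] / width              # normalize x to [0, 1]
    v = 1.0 - pix[:, 1] / height       # flip y so v grows upward (common UV convention)
    in_front = cam[:, 2] > 0           # vertices behind the camera cannot receive the photo
    return np.stack([u, v], axis=1), in_front
```

Because every UV comes from one fixed photo, surfaces that were hidden from the original camera have no image data; as the virtual camera moves further from the capture position, those gaps and stretched textures become visible, which is why this method only supports a limited range of camera movement.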

There is a significant disparity in effort and budget between these approaches. While photogrammetry and 3D modeling offer the most freedom and the most photorealistic results, they are often not feasible for most productions due to cost and time constraints. Using a 360-degree image or projecting an image onto a 3D mesh does not allow for extensive camera movement, which limits the advantages of virtual production.

Wouldn't it be great if there were a technique that allows for the effortless, fast, and cost-effective creation of environments while allowing free camera movement? That is where NeRF comes into the scene.

NeRFs for Virtual Production

Neural Radiance Fields (NeRF) is a machine learning technique for creating 3D representations of real-world objects and scenes. This is achieved by training a neural network on a set of 2D images of the scene. Once trained, the network can generate photorealistic new views of the object or scene from viewpoints that were not in the original images. Since Mildenhall et al. published the first NeRF paper in 2020, there has been a surge of research aimed at improving different aspects of NeRF, such as training and rendering speed. Although research is ongoing, NeRF has already been proposed as a solution to problems in fields such as video games, simulation, and film.
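
The rendering step is what turns a trained NeRF into images: for each pixel, a ray is cast into the scene, the network is queried at sample points along it for a density and a color, and those samples are alpha-composited into one pixel color. The sketch below shows only that compositing rule from the original NeRF paper, written in NumPy; the network itself, the ray sampling, and the name `composite_ray` are assumptions outside this sketch, and it is not Volinga's implementation.

```python
import numpy as np


def composite_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one camera ray (discrete NeRF volume rendering).

    sigmas: (N,) densities predicted by the network at each sample
    colors: (N, 3) RGB colors predicted at each sample
    deltas: (N,) distances between consecutive samples along the ray
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)          # opacity of each ray segment
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): fraction of light reaching sample i
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas                         # contribution of each sample
    rgb = (weights[:, None] * colors).sum(axis=0)    # expected color of the ray
    return rgb, weights
```

Since every pixel needs many network queries along its ray, a naive renderer performs millions of queries per frame, which helps explain why real-time NeRF rendering is difficult.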

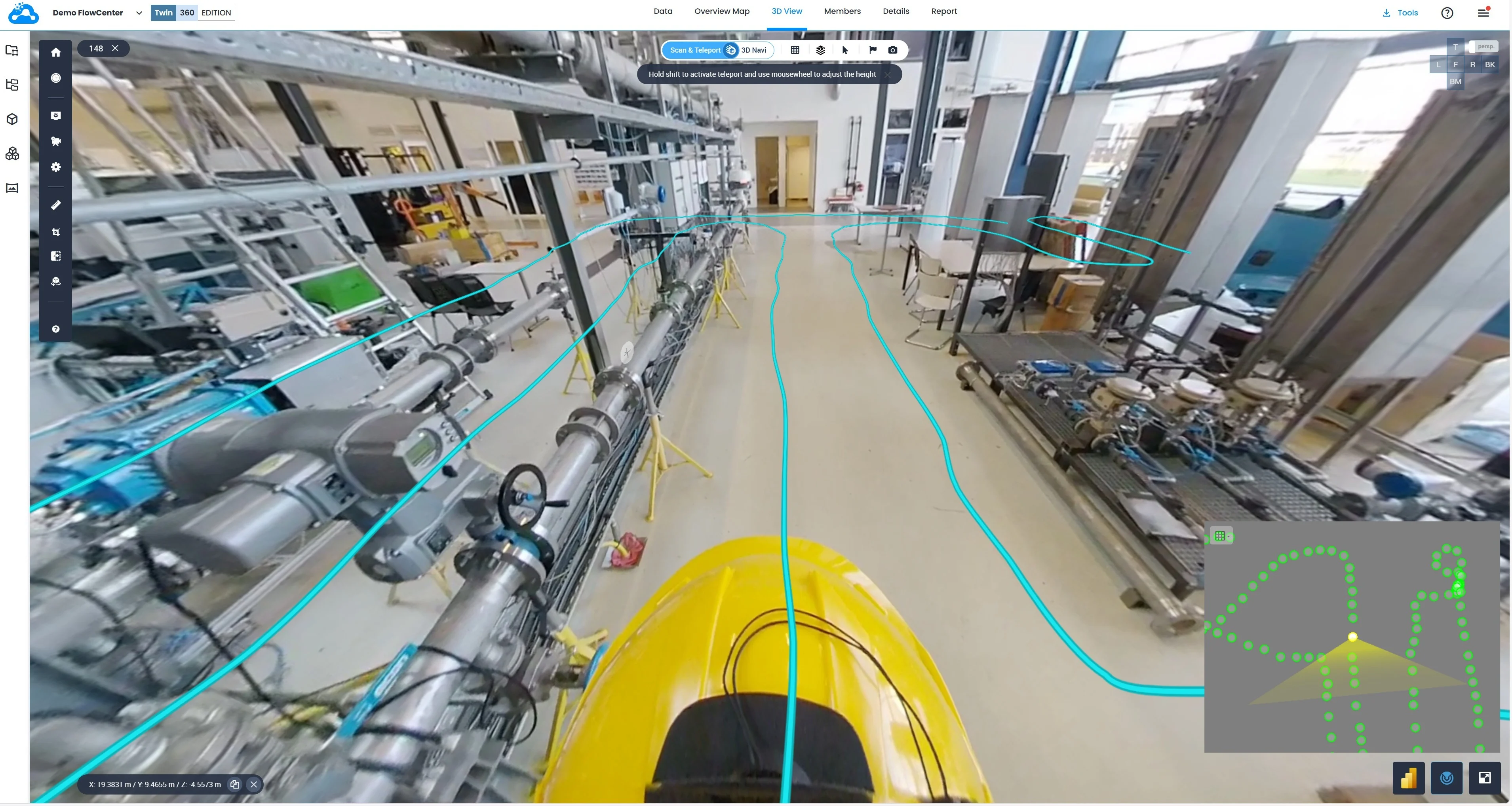

NeRF was quickly recognized as a promising new way of capturing environments for virtual production. By taking roughly 50 to 300 pictures of an environment and training a neural network (which currently takes between 30 minutes and an hour), we can move the camera freely through the environment and generate photorealistic images.

NeRF's applications could represent the next step in ICVFX. By drastically reducing the costs and time required to create a volumetric virtual environment, low-budget productions could create higher-quality content. Additionally, several locations could be brought to a virtual stage each day, accelerating filming speed and allowing artists to select from a wider variety of environments to enhance their creativity. Stories would no longer be limited by the number of locations, and we could travel anywhere!

NeRF could also be used for different parts of the production pipeline, such as location scouting or previsualization. For example, a director could use NeRF to preview how a given camera movement would look and select the best-suited movement before shooting, avoiding the need to repeat the same shot with different camera movements.

However, two main obstacles stand in the way of using NeRF in virtual production: rendering NeRFs in real time and integrating them into Unreal Engine.

How Does the Volinga Suite Enhance Production Pipelines

Earlier this year, Volinga announced the launch of the Volinga Suite, the first platform that seamlessly creates and renders NeRFs in Unreal Engine. Our aim is to overcome the obstacles posed by the inability to render NeRFs in real-time and the lack of integration within game engines. Our solution caters to the different use cases that were previously blocked by these limitations, and this has resonated with various communities, including the virtual production community.

Over the last two months, we collaborated with different virtual production partners to ensure that the Volinga Suite meets the needs of studios by providing the missing piece in their workflows. We are thrilled to announce that we have achieved this goal.

With our .NVOL file format powering the platform, the Volinga Suite now offers a straightforward pipeline for environment capture (using Volinga Creator) and rendering (using Volinga Renderer) on virtual stages. Our plugin is compatible with the most widely used solutions for virtual production in Unreal Engine, such as Disguise RenderStream and Pixotope. Our partners at Studio Lab have even demonstrated the use of different NeRFs as virtual environments in this video.

Studio Lab tests using different NeRFs as virtual environments.

We believe that the Volinga Suite will democratize virtual production by making true 3D accessible to any studio. It will also enhance existing production pipelines by making them faster and more efficient and by removing existing limits on artists' creativity.

Creative experiments using NeRF hallucinations at Studio Lab virtual stage
