The world of research has been particularly exciting with the rise of Radiance Field methods, volumetric scene representations such as Neural Radiance Fields (NeRFs) and Gaussian Splatting (3DGS). These techniques have made it far easier to reconstruct highly detailed environments from nothing more than a set of captured images.

However, when it comes to inverse rendering (reconstructing not only hyper-real visuals, but also the materials, geometry, and lighting of a scene from a set of images), there's still work to be done. Methods exist, but they run into an age-old trade-off between accuracy and computational efficiency.

Traditionally, rendering methods rely on recursive path tracing to simulate how light bounces around a scene. While highly accurate, this is computationally expensive. To reduce the overhead, recent approaches use radiance caches: data structures that store the light arriving at any point from any direction, so it doesn't have to be recomputed by tracing every bounce. You might even remember a paper from Instant NGP author Thomas Müller that tackles this. The catch? Many of these methods introduce bias, leading to inaccuracies in both the final image and the reconstructed material properties. This bias affects not only the visuals but also the gradients used in optimization, which are crucial for improving the reconstructed scene.
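To make that bias concrete, here is a deliberately tiny sketch. The setup is hypothetical and not taken from Flash Cache or Müller's work: a closed "furnace" scene where every surface emits `le` and reflects a fraction `albedo` of whatever light reaches it, so the infinite-bounce radiance is `le / (1 - albedo)`. The cache is modeled as a single number that is 10% too dark, standing in for a blurry, low-capacity model.

```python
def path_traced(le: float, albedo: float, depth: int) -> float:
    """Recursive estimate: follow `depth` bounces, then contribute nothing further."""
    if depth == 0:
        return 0.0
    return le + albedo * path_traced(le, albedo, depth - 1)


def cached(le: float, albedo: float, cache_value: float) -> float:
    """One bounce, then read incoming radiance from a cache instead of recursing."""
    return le + albedo * cache_value


le, albedo = 1.0, 0.8
# Closed-form infinite-bounce radiance for this toy furnace: L = le + albedo * L.
exact = le / (1.0 - albedo)

# Deep recursion converges to the exact value but costs one evaluation per bounce.
deep = path_traced(le, albedo, depth=200)

# Hypothetical cache that under-estimates incoming light by 10%: fast, but the
# error (albedo * cache error) is systematic, so repeating the lookup never removes it.
biased = cached(le, albedo, cache_value=0.9 * exact)

print(f"exact={exact:.3f} deep={deep:.3f} cached={biased:.3f}")
```

With these values the exact answer is 5.0, deep recursion matches it, and the cached shortcut lands at about 4.6. Averaging more lookups never closes that gap, which is what distinguishes bias from ordinary noise.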

Flash Cache, a new method from Google and CMU, tackles these challenges by reducing bias: it approximates the volume rendering integral and uses Monte Carlo sampling to evaluate the rendering integral. The result avoids the biased shortcuts of earlier methods while remaining computationally efficient. By combining a NeRF-based radiance cache with occlusion-aware importance sampling and a cache-based control variate, this approach brings a new level of accuracy to radiance-cache-based rendering.

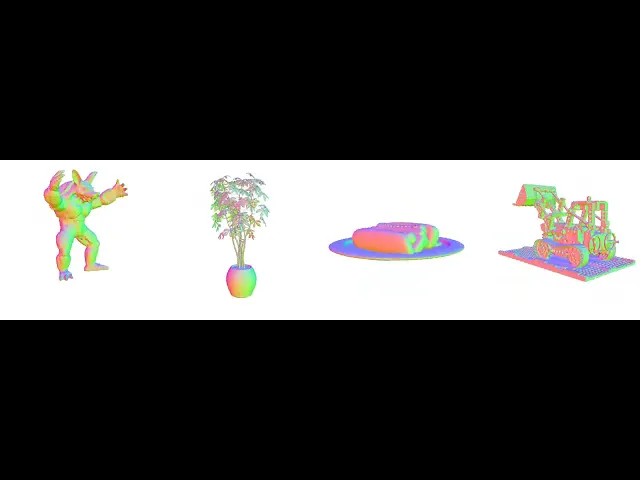

The result is significantly improved inverse rendering, particularly in scenes with complex lighting effects such as specular reflections and other challenging light transport. Many existing approaches assume a single, fixed surface intersection per ray or rely on low-capacity, blurry representations of incoming radiance. Flash Cache avoids these limitations by using volumetric representations, yielding higher-quality renders and more accurate material reconstruction.

The main issue with many existing techniques lies in how they approximate light transport. To speed up rendering, methods either cut corners by simplifying the geometry or use low-capacity models that struggle to capture fine details, especially in scenes with intricate lighting. These shortcuts make rendering faster, but they introduce bias that sacrifices realism.

Flash Cache sidesteps these pitfalls by introducing occlusion-aware importance sampling. The concept is relatively simple yet highly effective: instead of blindly sampling light from all directions, the system chooses directions based on the distance and intensity of the incident light, as well as whether that light is blocked by an object in the scene. This yields more accurate lighting estimates from the same number of samples and cuts down on wasted computation. It’s like a photographer knowing where to place a light for the best shot, rather than wasting energy lighting up areas that won’t be visible in the final image.
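Here is a toy sketch of why occlusion awareness matters, under heavy simplifying assumptions: incoming light is discretized into eight hypothetical directions, the brightest one is blocked, and `vis_estimate` is a hypothetical, imperfect visibility guess. How Flash Cache actually estimates occlusion is not modeled here. All three samplers are unbiased Monte Carlo estimators; they differ only in variance.

```python
import random
import statistics

# Hypothetical toy scene: incoming light discretized into 8 directions.
radiance = [0.1, 0.1, 5.0, 0.2, 4.0, 0.1, 0.3, 0.2]  # brightness arriving from each direction
visible = [1, 1, 0, 1, 1, 1, 1, 1]  # 0 means an occluder blocks that direction

# Target quantity: total light that actually reaches the shading point.
truth = sum(l * v for l, v in zip(radiance, visible))


def normalize(weights):
    """Turn non-negative weights into a probability distribution."""
    total = sum(weights)
    return [w / total for w in weights]


def estimate(pdf, n, rng):
    """Monte Carlo estimate: average f(i) / p(i) over n directions drawn from pdf.

    Unbiased as long as p(i) > 0 wherever radiance[i] * visible[i] > 0.
    """
    picks = rng.choices(range(len(pdf)), weights=pdf, k=n)
    return sum(radiance[i] * visible[i] / pdf[i] for i in picks) / n


# 1) Uniform: every direction equally likely.
uniform = normalize([1.0] * len(radiance))
# 2) Brightness only: wastes many samples on the bright but blocked direction 2.
brightness = normalize(radiance)
# 3) Occlusion-aware: brightness times a hypothetical, imperfect visibility guess.
#    Every weight stays positive, so a wrong guess costs variance, not bias.
vis_estimate = [0.9, 0.9, 0.05, 0.9, 0.9, 0.9, 0.9, 0.9]
occlusion_aware = normalize([l * v for l, v in zip(radiance, vis_estimate)])

rng = random.Random(0)
results = {}
for name, pdf in [("uniform", uniform), ("brightness", brightness), ("occlusion-aware", occlusion_aware)]:
    runs = [estimate(pdf, 16, rng) for _ in range(2000)]
    results[name] = (statistics.mean(runs), statistics.stdev(runs))
    print(f"{name:16s} mean={results[name][0]:.3f} std={results[name][1]:.3f}  (truth={truth:.3f})")
```

All three means should land close to the true value of 5.0, while the spread shrinks from uniform to brightness-only to occlusion-aware sampling. For this toy setup the exact per-sample variances work out to about 104.6, 25, and 1.4 respectively.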

But that’s only half the story. Rendering high-quality light transport effects, especially for scenes with specular surfaces or reflections, requires more than just smart sampling. The other innovation here is the fast cache architecture. This fast cache acts as a control variate, reducing the variance in lighting calculations while keeping the system computationally efficient. It’s essentially a balancing act: the fast cache provides a rough estimate of the incoming light, while the more expensive, high-quality cache is used to correct the fast cache’s errors. The result is unbiased, high-quality rendering without the computational cost of fully recursive methods.
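A control variate is easiest to see in one dimension. The sketch below is a hypothetical stand-in, not the paper's architecture: `expensive_cache` plays the high-quality cache, `fast_cache` a cheap approximation that misses fine detail, and the fast cache's integral is assumed to be known exactly (a real system would have to estimate it, a detail this sketch glosses over). The estimator adds that cheap integral to a Monte Carlo estimate of the residual between the two caches, so it stays unbiased while its variance depends only on how closely the fast cache tracks the expensive one.

```python
import math
import random
import statistics


def expensive_cache(x):
    """Hypothetical high-quality radiance along a 1D slice: smooth trend plus fine detail."""
    return 1.0 + x * x + 0.1 * math.sin(20.0 * x)


def fast_cache(x):
    """Hypothetical cheap approximation: captures the smooth trend, misses the detail."""
    return 1.0 + x * x


# Integrals over [0, 1], available in closed form for this toy example.
FAST_INTEGRAL = 4.0 / 3.0
TRUTH = 4.0 / 3.0 + 0.1 * (1.0 - math.cos(20.0)) / 20.0


def plain_mc(n, rng):
    """Standard Monte Carlo: average n expensive queries at uniform random points."""
    return sum(expensive_cache(rng.random()) for _ in range(n)) / n


def control_variate(n, rng):
    """Known fast-cache integral plus a Monte Carlo estimate of (expensive - fast).

    Unbiased because E[expensive(x) - fast(x)] = TRUTH - FAST_INTEGRAL for x ~ U(0, 1).
    """
    residual = 0.0
    for _ in range(n):
        x = rng.random()
        residual += expensive_cache(x) - fast_cache(x)
    return FAST_INTEGRAL + residual / n


rng = random.Random(0)
plain_runs = [plain_mc(8, rng) for _ in range(2000)]
cv_runs = [control_variate(8, rng) for _ in range(2000)]
plain_mean, plain_std = statistics.mean(plain_runs), statistics.stdev(plain_runs)
cv_mean, cv_std = statistics.mean(cv_runs), statistics.stdev(cv_runs)
print(f"truth           = {TRUTH:.4f}")
print(f"plain MC        mean={plain_mean:.4f} std={plain_std:.4f}")
print(f"control variate mean={cv_mean:.4f} std={cv_std:.4f}")
```

Both estimators should average to the true integral, but the control-variate runs spread far less, because only the small `0.1 * sin(20x)` residual is left for Monte Carlo to estimate.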

It’s worth noting that this isn’t quite ready for consumer hardware yet, with training taking place on a single A100. The authors note, however, that plenty of optimizations remain available that could eventually let it run on consumer hardware. Many of the Flash Cache researchers also worked on NeRF-Casting, so perhaps these methods will be bundled into an updated version of Zip-NeRF or, in an ideal world, integrated into platforms like Nerfstudio.

For more information, check out their Project Page and stay tuned for a code release!