Relighting would make NeRFs useful in many more practical applications, but accurately rendering objects under varying lighting conditions has remained a challenge. This is where IllumiNeRF comes in.

Traditional methods approach the problem through inverse rendering: they decompose an object's appearance into geometry, materials, and illumination to reconstruct a 3D representation that can be relit. IllumiNeRF takes a different tack. It uses a generative approach, specifically a 2D Relighting Diffusion Model, to produce high-quality relit images, which are then used to train a NeRF for novel view synthesis.

The process begins with the preparation of input data, consisting of a set of images of an object captured from different viewpoints, along with the corresponding camera poses. Additionally, the target lighting conditions under which the 3D model will be relit are specified. This initial step sets the stage for the subsequent phases of geometry estimation and relighting.
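To make the inputs concrete, here is a minimal sketch of how they could be bundled and sanity-checked. The class name `RelightingInputs`, its field names, and the choice of an equirectangular environment map for the target lighting are assumptions for illustration; they are not taken from the IllumiNeRF code.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class RelightingInputs:
    """Hypothetical container for the data described above."""
    images: np.ndarray          # (N, H, W, 3) RGB images in [0, 1]
    cam_to_world: np.ndarray    # (N, 4, 4) one camera pose per image
    target_env_map: np.ndarray  # (He, We, 3) target lighting (assumed format)

    def __post_init__(self):
        if self.images.ndim != 4 or self.images.shape[-1] != 3:
            raise ValueError("images must have shape (N, H, W, 3)")
        n = self.images.shape[0]
        if self.cam_to_world.shape != (n, 4, 4):
            raise ValueError("need exactly one 4x4 pose per image")
        if self.target_env_map.ndim != 3 or self.target_env_map.shape[-1] != 3:
            raise ValueError("target_env_map must have shape (He, We, 3)")


# Eight placeholder views with identity poses and a constant white environment.
inputs = RelightingInputs(
    images=np.zeros((8, 64, 64, 3)),
    cam_to_world=np.tile(np.eye(4), (8, 1, 1)),
    target_env_map=np.ones((16, 32, 3)),
)
```

Validating shapes up front catches a mismatched image and pose count before any training starts.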

Before relighting, IllumiNeRF needs an accurate estimate of the object's 3D geometry. It gets one by training an initial NeRF on the input images and poses. Once trained, this model yields detailed geometry of the object, which is crucial for rendering radiance cues in the next phase.
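One common way to read geometry out of a trained NeRF is to compute an expected depth along each camera ray from the volume rendering weights. The sketch below shows that generic NeRF-style computation for a single ray. It is not UniSDF-specific, and the function name `expected_depth` is an assumption made for this example.

```python
import numpy as np


def expected_depth(sigmas, t_vals):
    """Expected ray termination depth from densities sampled along one ray.

    sigmas: (S,) non-negative densities at the sample points.
    t_vals: (S,) increasing distances of the samples from the camera.
    """
    # Distance between consecutive samples; the last interval is treated as very long.
    deltas = np.diff(t_vals, append=t_vals[-1] + 1e10)
    # Opacity contributed by each interval.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: probability that the ray reaches each sample unoccluded.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    return float(np.sum(weights * t_vals)), weights


# A ray that hits a dense surface at the sixth sample.
t_vals = np.linspace(1.0, 2.0, 10)
sigmas = np.zeros(10)
sigmas[5] = 1e3
depth, weights = expected_depth(sigmas, t_vals)
```

Repeating this for every pixel gives a depth map, from which surface points and normals can be derived for the shading step.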

The central innovation of IllumiNeRF lies in its use of a 2D Relighting Diffusion Model to relight each input image under the desired target illumination. To facilitate this, radiance cues are generated. These cues are pre-rendered images that provide essential information about the effects of specularities, shadows, and global illumination on the object's surface. Using a shading model, the object's estimated geometry is rendered under the target lighting conditions to create these cues.

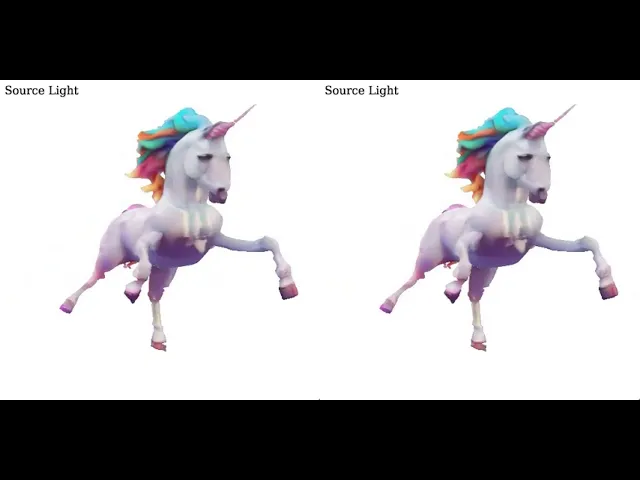
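As a rough illustration of what a radiance cue is, the following sketch shades a normal map with a Lambertian model under a handful of directional lights. This is a deliberate simplification: it covers only direct diffuse shading and ignores the shadows, specularities, and global illumination mentioned above. The function name and the representation of the target lighting as discrete directional lights are assumptions.

```python
import numpy as np


def diffuse_radiance_cue(normals, light_dirs, light_rgb):
    """Lambertian shading of estimated geometry under the target lighting.

    normals:    (H, W, 3) unit surface normals rendered from the estimated geometry.
    light_dirs: (L, 3) unit vectors pointing from the surface toward each light.
    light_rgb:  (L, 3) RGB intensity of each light.
    """
    # Cosine between each normal and each light, clamped so back-facing lights add nothing.
    cos = np.clip(np.einsum("hwc,lc->hwl", normals, light_dirs), 0.0, None)
    # Sum the contributions of all lights; 1/pi is the Lambertian BRDF for unit albedo.
    return np.einsum("hwl,lc->hwc", cos, light_rgb) / np.pi


# A flat patch facing +z, lit by one light from above and one from below.
normals = np.zeros((2, 2, 3))
normals[..., 2] = 1.0
light_dirs = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0]])
light_rgb = np.array([[1.0, 0.5, 0.25], [1.0, 1.0, 1.0]])
cue = diffuse_radiance_cue(normals, light_dirs, light_rgb)
```

In this example only the light above the patch contributes, because the clamp discards the light behind the surface.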

With the radiance cues in hand, the trained diffusion model is used to relight each input image according to the target illumination. The model generates multiple plausible relit images for each viewpoint, offering diverse yet realistic representations of how the object would appear under the target lighting. These relit images are the foundation for constructing a consistent 3D representation using a Latent NeRF model. For both the initial NeRF and the Latent NeRF, the authors use UniSDF, which you might remember as having two radiance fields that decouple geometry from appearance.
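The data flow of this sampling step can be sketched as a loop that draws several samples per viewpoint and records which view and which sample each image came from. The real relighting model is a diffusion model; `toy_relighter` below is only a stand-in that tints the image with the cue and adds noise, and every name here is hypothetical.

```python
import numpy as np


def sample_relit_dataset(images, cues, relight_fn, samples_per_view, seed=0):
    """Draw several relit samples per view and keep track of their indices."""
    rng = np.random.default_rng(seed)
    relit, view_ids, sample_ids = [], [], []
    for v, (img, cue) in enumerate(zip(images, cues)):
        for s in range(samples_per_view):
            relit.append(relight_fn(img, cue, rng))
            view_ids.append(v)
            sample_ids.append(s)
    return np.stack(relit), np.array(view_ids), np.array(sample_ids)


def toy_relighter(image, cue, rng):
    """Stand-in for the diffusion model (assumption): tint by the cue, add noise."""
    noise = rng.normal(0.0, 0.02, size=image.shape)
    return np.clip(image * cue + noise, 0.0, 1.0)


images = np.full((3, 4, 4, 3), 0.5)
cues = np.ones((3, 4, 4, 3))
relit, view_ids, sample_ids = sample_relit_dataset(
    images, cues, toy_relighter, samples_per_view=2
)
```

Keeping the view and sample indices alongside the images is what later allows each relit image to be paired with its own latent code.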

The Latent NeRF model integrates the variations across the relit images to create a unified 3D structure. Each relit image is associated with a latent code that represents one plausible explanation of the object's material properties and geometry. The Latent NeRF model is then optimized on the set of relit images by minimizing the reconstruction error between the relit images and the NeRF-rendered views, which pushes it toward a consistent and accurate 3D representation.
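The idea of shared parameters plus one latent code per relit image can be illustrated with a toy problem. In the sketch below, a single shared color vector stands in for the NeRF, each target gets its own latent offset, and both are fit by gradient descent on a reconstruction loss. The L2 penalty on the latents and all names are assumptions for this toy; the actual Latent NeRF is a full neural field, not a vector.

```python
import numpy as np


def fit_shared_and_latents(targets, lam=0.1, lr=0.2, steps=3000):
    """Fit one shared vector and per-target latent offsets by gradient descent.

    Loss: mean over k of ||shared + latent_k - target_k||^2 + lam * ||latent_k||^2
    """
    k, dim = targets.shape
    shared = np.zeros(dim)
    latents = np.zeros((k, dim))
    for _ in range(steps):
        residual = shared + latents - targets            # (K, D)
        grad_shared = 2.0 * residual.mean(axis=0)        # gradient w.r.t. shared
        grad_latents = 2.0 * (residual + lam * latents) / k
        shared -= lr * grad_shared
        latents -= lr * grad_latents
    return shared, latents


# Four slightly different "relit samples" of the same underlying color.
targets = np.array([
    [0.80, 0.40, 0.20],
    [0.70, 0.50, 0.20],
    [0.90, 0.40, 0.30],
    [0.80, 0.30, 0.10],
])
shared, latents = fit_shared_and_latents(targets)
```

In this toy the shared vector converges to what the samples agree on, while the latents absorb the per-sample differences.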

Once the Latent NeRF model is trained, it can render the object from novel viewpoints under the new lighting conditions. For each new viewpoint, latent codes are sampled to condition the NeRF model, which then renders the object so that its appearance is consistent with the target illumination.

The 2D Relighting Diffusion Model is built on a latent image diffusion model similar to Stable Diffusion and is fine-tuned using a ControlNet approach to condition on radiance cues.
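A defining trait of ControlNet-style conditioning is that the control branch is attached through zero-initialized layers, so the pretrained model's behavior is unchanged at the start of fine-tuning. The sketch below shows that idea with plain matrices in place of convolutions. The class name, dimensions, and `tanh` encoder are assumptions, and the article does not say how closely IllumiNeRF follows the original ControlNet design.

```python
import numpy as np


class ZeroInitControlBranch:
    """Toy control branch: encodes a cue and adds it through a zero-initialized layer."""

    def __init__(self, cue_dim, feat_dim, seed=0):
        rng = np.random.default_rng(seed)
        # Stand-in for the trainable encoder that processes the radiance cues.
        self.encoder = rng.normal(0.0, 0.1, size=(cue_dim, feat_dim))
        # Zero-initialized projection: the branch contributes nothing before training.
        self.zero_proj = np.zeros((feat_dim, feat_dim))

    def __call__(self, base_features, cue):
        control = np.tanh(cue @ self.encoder)
        return base_features + control @ self.zero_proj


branch = ZeroInitControlBranch(cue_dim=3, feat_dim=4)
base = np.ones((2, 4))   # features from the frozen base model (placeholder values)
cue = np.ones((2, 3))    # encoded radiance cue (placeholder values)
out = branch(base, cue)  # identical to `base` until zero_proj is trained
```

As fine-tuning updates `zero_proj` away from zero, the cue gradually starts to steer the output.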

It's exciting to see a new approach to NeRF-based relighting and the promise it represents across industries. However, it does seem like it will be a little while before IllumiNeRF is optimized enough for consumer usage.

For more details and to explore IllumiNeRF, visit their project page.