When I first started creating with NeRFs, one of my biggest pain points was pushing a dataset through COLMAP, only to get a CUDA out-of-memory error. It bothered me so much that I actually created two calculators to help me never go over my VRAM limit again.

In roughly May or June of 2022, the NVIDIA team released an update that allowed me to process three times as many images on my 3080. I was so excited!

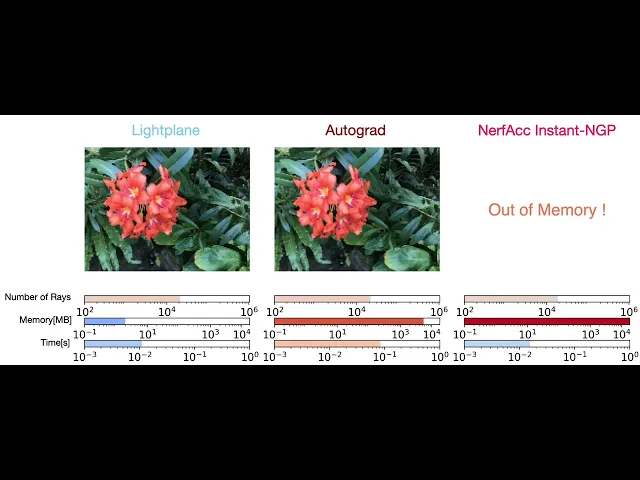

Since then, we haven't seen many improvements in the computational efficiency or memory footprint of radiance fields. That's why I was thrilled to see Lightplane last night from the University of Michigan and Meta, which greatly reduces how much memory radiance fields need.

There are two main components that power Lightplane: the Lightplane Renderer and Splatter. You might think the former deals with NeRFs and the latter with Gaussian Splatting, but Lightplane focuses squarely on NeRFs, which employ volumetric rendering, whereas Gaussian Splatting utilizes rasterization.

The Lightplane Renderer tackles the memory bottleneck by reconfiguring the computation of rendering operations across 3D neural fields. Instead of storing intermediate values for every point evaluated along a ray (a common necessity in traditional volumetric rendering like NeRF), it processes and updates data sequentially along the ray's path, thereby minimizing memory storage.
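To make the idea concrete, here is a minimal sketch in plain Python of the difference between storing per-sample values and streaming along the ray. It is not Lightplane's code or API; the function names, the fixed step size `delta`, and the `sample_fn` callback are all assumptions made for illustration.

```python
import math

def render_ray_stored(densities, colors, delta):
    """Baseline: keep a weight for every sample, then sum at the end."""
    weights = []                      # grows with the number of samples
    transmittance = 1.0
    for sigma in densities:
        alpha = 1.0 - math.exp(-sigma * delta)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    pixel = [sum(w * c[k] for w, c in zip(weights, colors)) for k in range(3)]
    return pixel, transmittance

def render_ray_streaming(sample_fn, num_samples, delta):
    """Streaming: keep only the running pixel color and transmittance."""
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in range(num_samples):
        # sample_fn is a hypothetical stand-in for evaluating the 3D field.
        sigma, color = sample_fn(i)
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # the sample's data can now be dropped
    return pixel, transmittance
```

Both functions produce the same pixel, but the streaming version's working memory stays constant no matter how many samples sit on the ray.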

As the renderer processes each point along a ray, it calculates the necessary features (e.g., color, density) and immediately updates the image pixel and transmittance values. This means only the current processing point's data is held in memory at any time. By recalculating intermediate values only when needed for backpropagation and not storing them, the Lightplane Renderer drastically cuts down the memory requirements typically needed for gradient computation.
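The recompute-for-backprop idea can be sketched the same way. The snippet below is my own simplified illustration, not the paper's kernel: it uses a single color channel, a fixed step `delta`, and hypothetical function names. The forward pass returns only the final pixel, and the backward pass replays the ray to rebuild what it needs for the density gradients.

```python
import math

def forward(sigmas, colors, delta):
    """Streaming forward pass; returns only the final pixel and transmittance."""
    pixel, T = 0.0, 1.0
    for sigma, c in zip(sigmas, colors):
        alpha = 1.0 - math.exp(-sigma * delta)
        pixel += T * alpha * c
        T *= 1.0 - alpha
    return pixel, T

def backward_sigma(sigmas, colors, delta, pixel, grad_pixel=1.0):
    """Replay the ray, recomputing alpha and T, to get d(loss)/d(sigma_i).

    Only the final pixel from the forward pass is reused; no per-sample
    intermediates were saved. The returned list is the output itself.
    """
    grads = []
    T, accumulated = 1.0, 0.0
    for sigma, c in zip(sigmas, colors):
        alpha = 1.0 - math.exp(-sigma * delta)
        accumulated += T * alpha * c   # contribution of samples 0..i
        behind = pixel - accumulated   # contribution of samples after i
        # Raising sigma_i brightens sample i and dims everything behind it.
        grads.append(grad_pixel * delta * (T * (1.0 - alpha) * c - behind))
        T *= 1.0 - alpha
    return grads
```

The trade is extra compute for less memory: the ray is walked twice, but nothing per-sample has to survive between the two passes.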

The method employs a strategic use of GPU cache to temporarily hold essential data during computation, further optimizing memory use without compromising processing speed.

Conversely, Splatter focuses on the process of lifting 2D image data into a 3D space, a critical step for reconstructing 3D models from 2D inputs. It optimizes this process by reversing the typical data flow seen in rendering, pushing 2D information directly into the 3D structure rather than pulling information out of the 3D structure to produce 2D pixels.

Splatter projects features from 2D images directly into the 3D model’s data structure. Each pixel's features are mapped onto the 3D-structure cell of the corresponding point along a ray, thereby bypassing the need to store large volumes of intermediate 3D data.
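Here is a rough sketch of that push-style operation in plain Python. To keep it short I assume a dense voxel grid over the unit cube stored as a flat list, nearest-voxel lookup, and evenly spaced samples; the function name and parameters are hypothetical and this is not how Lightplane's Splatter is actually implemented.

```python
import math

def splat_pixel(grid, grid_res, origin, direction, feature, near, far, num_samples):
    """Scatter-add one pixel's feature into each voxel sampled along its ray."""
    step = (far - near) / num_samples
    for i in range(num_samples):
        t = near + (i + 0.5) * step
        point = [origin[k] + t * direction[k] for k in range(3)]
        # Nearest-voxel index inside the unit cube; skip samples outside it.
        ix, iy, iz = (math.floor(p * grid_res) for p in point)
        if not all(0 <= j < grid_res for j in (ix, iy, iz)):
            continue
        cell = grid[(ix * grid_res + iy) * grid_res + iz]
        # Accumulate in place, so no per-sample 3D tensor is ever built.
        for c, value in enumerate(feature):
            cell[c] += value

# Usage: a 4x4x4 grid with 2 feature channels and one ray along the x axis.
res, channels = 4, 2
grid = [[0.0] * channels for _ in range(res ** 3)]
splat_pixel(grid, res, (0.0, 0.6, 0.6), (1.0, 0.0, 0.0), [1.0, 2.0], 0.0, 1.0, 4)
```

Each pixel writes straight into the cells its ray touches, so memory scales with the grid rather than with the number of images times the number of samples per ray.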

By inverting the standard rendering logic, Splatter manages to reduce memory load significantly. This inverse approach also simplifies the computational complexity of mapping features to 3D points. The component uses a hashing scheme to efficiently aggregate and store 2D data into 3D structures, ensuring that memory usage is minimized even when processing large arrays of input images.

The training efficiency numbers they're getting are crazy: memory use drops by orders of magnitude, which could let people begin training on radically larger datasets with significantly fewer GPUs. To be blunt, this could mean that even GPUs with small amounts of VRAM can work. Paired with NVIDIA's recent NeRF-XL, which can distribute training across multiple GPUs, you can imagine what could be coming.

Excitingly, the code has already been released with a BSD 3.0 License, allowing for permissive use and incorporation within projects. Check out more from their project page, here!

In addition, they have also created quite robust documentation to accompany the code. I'm very excited to see it implemented across existing platforms. Hopefully this means my calculators will need to be revisited!