It's a very exciting day! This is nerfstudio's first formal release since mid-September, and it comes with a ton of updates. Most people will probably point to the formal release of nerfstudio's Gaussian Splatting implementation.

Splatfacto is the formal release of the Gaussian Splatting implementation that was soft-launched late last year, and it is built on gsplat. gsplat is a completely in-house re-implementation of the original Inria algorithm. This means you can use nerfstudio's Gaussian Splatting implementation commercially, as long as you follow nerfstudio's Apache 2.0 license.

As of right now, if you want to export a .ply of your Gaussian splat, you will need to have trained it with Splatfacto first.

Just as nerfacto comes in nerfacto, nerfacto-big, and nerfacto-huge variants, there are different ways to control Gaussian Splatting's reconstruction quality within nerfstudio. The nerfstudio team enables this by adjusting how Gaussians are culled based on their alpha (opacity) values. They provide an example command showing how to do this:

```bash
ns-train splatfacto --pipeline.model.cull_alpha_thresh=0.005 --pipeline.model.continue_cull_post_densification=False --data <path to your data location>
```
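To build some intuition for what `cull_alpha_thresh` and `continue_cull_post_densification` control, here is a minimal, hypothetical Python sketch of opacity-based culling. It is not nerfstudio's or gsplat's actual code: it assumes each Gaussian stores its opacity as a raw logit that is passed through a sigmoid (as in the original 3DGS formulation), and that culling is simply "drop every Gaussian whose activated opacity is below the threshold." The helper names `cull_by_alpha` and `culling_enabled`, the `densify_stop_step` parameter, and the toy logits are all illustrative.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Map a raw opacity logit to an alpha value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def cull_by_alpha(opacity_logits: List[float], cull_alpha_thresh: float) -> List[int]:
    """Hypothetical helper: return indices of Gaussians that survive culling.

    A Gaussian is kept only if its activated opacity is at least
    `cull_alpha_thresh`; everything more transparent is pruned.
    """
    return [
        i for i, logit in enumerate(opacity_logits)
        if sigmoid(logit) >= cull_alpha_thresh
    ]


def culling_enabled(step: int, densify_stop_step: int,
                    continue_cull_post_densification: bool) -> bool:
    """Hypothetical schedule: always cull while densification is running;
    after `densify_stop_step`, cull only if the flag is True."""
    return step < densify_stop_step or continue_cull_post_densification


if __name__ == "__main__":
    # Toy opacity logits for five Gaussians (illustrative values only).
    logits = [-6.0, -4.0, -2.0, 0.0, 3.0]
    # Activated alphas are roughly 0.0025, 0.018, 0.119, 0.5, 0.953.
    print(cull_by_alpha(logits, 0.1))    # stricter threshold  -> [2, 3, 4]
    print(cull_by_alpha(logits, 0.005))  # looser threshold    -> [1, 2, 3, 4]
    # With the flag set to False, culling stops once densification ends.
    print(culling_enabled(20000, 15000, False))  # -> False
```

In this sketch, lowering the threshold to 0.005 keeps the faint Gaussian at index 1, and turning off post-densification culling stops pruning late in training. Both changes leave more Gaussians in the scene, which is presumably why the example command can trade extra memory for higher reconstruction quality.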

Splatfacto does not support exporting a .ply from a NeRF trained with nerfacto; you can only export splats from models that were trained as splats. Note, though, that the first step, running ns-process-data to generate a structure-from-motion (SfM) solution, produces data that can be used to train any of the nerfacto methods or Gaussian Splatting.

Once your capture is exported, it will work with several existing viewers, including PlayCanvas's SuperSplat, Mark Kellogg's GaussianSplats3D, Spline, Polycam, and Antimatter's viewer.

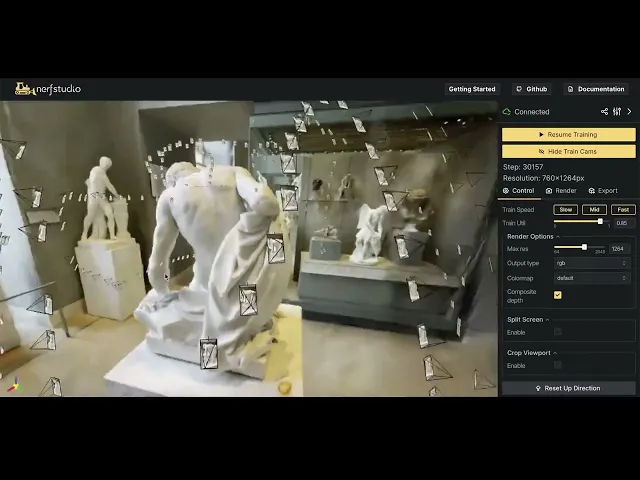

Another major development is the formal unveiling of the new nerfstudio viewer, viser, located at https://viewer.nerf.studio. The new viewer, made by Brent Yi, comes with a ton of truly exciting features, including the share button that Professor Kanazawa teased last year at her Stanford HAI lecture. Yes, that's right. You can now share your captures from nerfstudio!

Viser itself is a Python library that offers:

- A Python API for visualizing 3D primitives in a web browser.
- Python-configurable GUI elements: buttons, checkboxes, text inputs, sliders, dropdowns, and more.

The backend can also be used to build custom web applications. It supports:

- WebSocket / HTTP server management on a shared port.
- Asynchronous server/client communication infrastructure.
- Client state persistence logic.
- Typed serialization: synchronization between Python dataclasses and TypeScript interfaces.

Back at SIGGRAPH, the nerfstudio team mentioned to me that they had begun work on it, and it is so exciting to see it finally come to fruition! Quite a few papers have leveraged the viser viewer over the last couple of months, including GARField (which we will be covering shortly), GaussianEditor, and F3RM.

It should also be noted that Meta Reality Labs makes a few appearances too. nerfstudio now supports the Eyeful Tower dataset from VR-NeRF. We recently spoke to their team about their IMAX-quality NeRFs that run in VR. I didn't recognize Project Aria at first, but it is now supported too. This development, I believe, may have larger implications than anyone realizes at the moment. Having a pipeline from glasses through to nerfstudio unlocks a tremendous number of interesting opportunities, and I will be paying very, very close attention to how this progresses.

Also making an appearance in the 1.0 release is PyNeRF, which we looked at late last year!

This release has been a long time coming, and it is always very exciting to see new developments from the Berkeley-based team. Luma AI continues to help sponsor nerfstudio's development. To see the full list of changes, please take a look at the nerfstudio changelog.