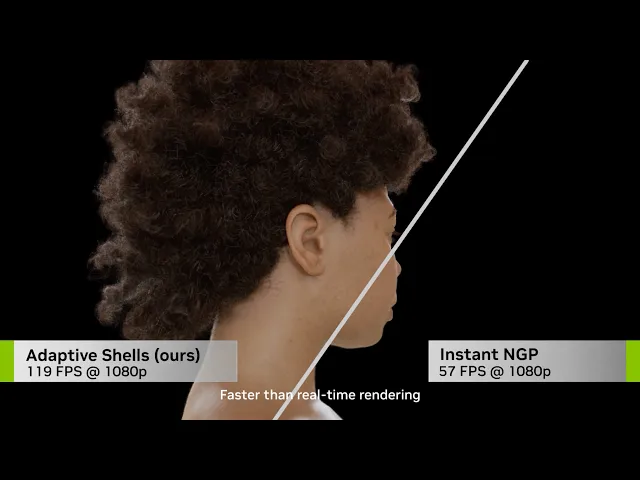

When Instant-NGP was released, it was seen as the moment the floodgates opened for NeRFs to be created in a fraction of the time it previously took. Today, NVIDIA has released a new paper called Adaptive Shells for Efficient Neural Radiance Field Rendering that speeds up inference by an additional ten times, while also increasing the fidelity of the NeRFs.

So how do they do it? And what exactly did they change to get the speed boost?

The bottleneck stems from the volumetric nature of traditional NeRFs: volume rendering is adept at capturing intricate details like fine strands of hair or the delicate structure of foliage, but it is less efficient for scenes dominated by solid surfaces.

You can really see how effective Adaptive Shells are in the above video from 0:13 to 0:23. The detail in her hair is astounding, and the method still manages to double the frame rate.

In most scenes, small, intricate details such as hair are the exception rather than the rule. So why does the entire scene need to be treated the same way? This seems to be one of the core questions the team set out to answer, and it's an important one. As the NVIDIA team realized, it makes sense to use different rendering techniques depending on the surface. Intricate surfaces, such as fuzz and strands of hair, benefit from exhaustive volume rendering. A smooth surface, say a white wall, is better served by a single sample per ray.

Recognizing this inefficiency, the researchers set out to refine NeRF technology, leading to a hybrid approach that integrates volumetric and surface-based rendering. Adaptive Shells is not just a step forward in accelerating the rendering process but also an improvement in overall visual fidelity. Essentially, the method allocates its resources a bit more intelligently, spending where it's necessary and simplifying where it isn't.

What they're doing makes a lot of sense, but it also introduces some complexities that they needed to solve for.

"First, the memory footprint of grid-based acceleration structures scales poorly with resolution. Second, the smooth inductive bias of MLPs hinders learning a sharp impulse or step function for volume density, and even if such an impulse was learned it would be difficult to sample it efficiently. Finally, due to the lack of constraints, the implicit volume density field fails to accurately represent the underlying surfaces [Wang et al. 2021], which often limits their application in downstream tasks that rely on mesh extraction." (Adaptive Shells for Efficient Neural Radiance Field Rendering)

These aren't small challenges, and so the NVIDIA team proposes a new volumetric NeRF method altogether.

The crux of this method lies in its ability to intelligently differentiate between parts of a scene that need detailed volumetric representation and those that can be efficiently rendered as solid surfaces. This is achieved through an "adaptive mesh envelope," a novel concept that acts as a spatial boundary for the neural volumetric representation.

The representation also includes a locally learned kernel whose size adjusts dynamically to the nature of the scene's surfaces. In areas with complex volumetric features like hair, the kernel size widens, allowing for a detailed representation. Conversely, in regions with solid surfaces, the kernel size tightens, enabling efficient rendering with minimal samples. In simpler terms, where the scene consists primarily of solid surfaces, the mesh envelope simplifies the rendering process, requiring fewer samples and thus significantly reducing the computational load. Interestingly, this is all built on NeuS and uses an SDF (signed distance function) to adapt the representation to the local complexity of the scene, which accelerates rendering.
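To make the role of the kernel size concrete, here is a minimal Python sketch, assuming a NeuS-style logistic bell centered on the surface. The function names and the example values are illustrative assumptions rather than anything taken from the paper; `kernel_size` stands for the width of the bell.

```python
import math

def logistic_density(sdf: float, kernel_size: float) -> float:
    """Bell-shaped logistic density of the signed distance.

    Assumption: a NeuS-like logistic bell that peaks on the surface
    (sdf == 0), with kernel_size controlling how wide the bell is.
    """
    z = abs(sdf) / kernel_size  # the bell is symmetric; abs() also avoids overflow in exp
    e = math.exp(-z)
    return e / (kernel_size * (1.0 + e) ** 2)

def relative_contribution(sdf: float, kernel_size: float) -> float:
    """Density relative to its on-surface peak; depends only on sdf / kernel_size."""
    return logistic_density(sdf, kernel_size) / logistic_density(0.0, kernel_size)

# Hypothetical values: the same point, 0.5 units away from the surface.
print("tight kernel:", relative_contribution(0.5, 0.05))  # surface-like region
print("wide kernel: ", relative_contribution(0.5, 0.5))   # hair-like region
```

With a tight kernel, a point half a unit off the surface contributes almost nothing, so it is safe to skip. With a wide kernel, the same point still carries much of the peak density and has to be sampled.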

Those expecting the same GUI as Instant-NGP may be disappointed at first, at least until they take a look at the refined, interactive menu.

An adaptive shell, defined by two explicit triangle meshes, marks the space contributing significantly to the rendered appearance. The thickness of this shell varies with the kernel size: a large kernel size results in a thick shell for volumetric content, while a small kernel size leads to a thin shell, suitable for surfaces.

To extract this shell, the fields of the kernel size and SDF are first sampled on a grid. The magnitude of the ratio of SDF to kernel size influences the rendering contribution along a ray. The method involves applying level set evolution to the SDF, creating eroded and dilated fields which are then extracted as inner and outer shell boundaries using the marching cubes technique. The outer surface is kept smooth to avoid artifacts, while the inner surface excludes regions not contributing to the rendered appearance. This process involves a series of steps including forward-Euler integration and clamping the results to ensure the appropriate growth or shrinkage of the level set.
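The erosion and dilation idea can be sketched in one dimension. This is a simplified stand-in, not the paper's procedure: instead of running level set evolution with forward-Euler steps, it offsets the SDF by a hypothetical multiple `eta` of the kernel size in a single shot, and it finds the zero crossing by linear interpolation where the real method runs marching cubes on a 3D grid. All names and values are assumptions for illustration.

```python
def zero_crossing(xs, values):
    """Return the first x where `values` reaches zero, by linear interpolation.

    This is the 1D analogue of extracting a level set with marching cubes.
    """
    for i in range(len(xs) - 1):
        v0, v1 = values[i], values[i + 1]
        if v0 == 0.0:
            return xs[i]
        if v0 * v1 < 0.0:
            return xs[i] + (xs[i + 1] - xs[i]) * v0 / (v0 - v1)
    if values[-1] == 0.0:
        return xs[-1]
    raise ValueError("field does not change sign on this grid")

def extract_shell(xs, sdf, kernel, eta=2.0):
    """Return (inner, outer) shell boundaries along a 1D slice.

    Assumption: the SDF is negative inside the object, and `eta` (hypothetical)
    sets how many kernel sizes away from the surface still matter.
    """
    dilated = [f - eta * s for f, s in zip(sdf, kernel)]  # zero level pushed outward
    eroded = [f + eta * s for f, s in zip(sdf, kernel)]   # zero level pulled inward
    return zero_crossing(xs, eroded), zero_crossing(xs, dilated)

xs = [i * 0.01 for i in range(201)]                # grid over [0, 2]
sdf = [x - 1.0 for x in xs]                        # surface at x = 1
thin = extract_shell(xs, sdf, [0.01] * len(xs))    # small kernel: surface-like
thick = extract_shell(xs, sdf, [0.10] * len(xs))   # large kernel: hair-like
print("thin shell:", thin, "thick shell:", thick)
```

The same surface yields a shell roughly ten times thicker when the kernel size is ten times larger, which matches the behavior described above.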

The extracted adaptive shell acts as an auxiliary structure to optimize the sampling of points along a ray. This approach efficiently skips empty spaces and concentrates sampling where necessary for high perceptual quality. Ray tracing is used to define intervals along the ray that intersect with the shell, and samples are then taken within these intervals.
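A rough sketch of the sampling step follows, assuming the ray tracer has already returned the intervals where a ray passes through the shell. The interval values, the `step` parameter, and the midpoint placement are hypothetical choices for illustration, and the ray tracing against the two meshes is omitted.

```python
import math

def sample_in_intervals(intervals, step):
    """Place evenly spaced midpoint samples inside each (t_enter, t_exit) interval.

    Space outside the intervals is skipped entirely, and an interval
    narrower than `step` still receives exactly one sample.
    """
    samples = []
    for t_enter, t_exit in intervals:
        width = t_exit - t_enter
        count = max(1, math.ceil(width / step))
        dt = width / count
        samples.extend(t_enter + (i + 0.5) * dt for i in range(count))
    return samples

# Hypothetical hits along one ray: a thin wall, then a thick patch of hair.
intervals = [(1.0, 1.01), (2.0, 2.5)]
samples = sample_in_intervals(intervals, step=0.125)
print(len(samples), "samples:", samples)
```

In this toy case the wall costs a single sample and the hair costs four, while marching the whole ray from 0 to 3 at the same step would take 24.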

One of the most exciting applications of this technology is its utility in game development and animation. The adaptive shells can be converted into a tetrahedral cage, allowing real-life elements to be integrated into game engines and simulations. Remarkably, this method achieves frame rates of up to 40 fps in large outdoor scenes and 300 fps in object captures on an RTX 4090 GPU.

https://twitter.com/zianwang97/status/1725300891421462926

Contributions from renowned experts like Thomas Müller, a key figure behind NVIDIA's Instant-NGP, and Sanja Fidler, head of NVIDIA's Toronto AI Lab, further underscore the significance of this work. Müller is also coming off a second consecutive year in which Time Magazine named one of his papers a best invention of the year. While NVIDIA has not yet confirmed public release plans, anticipation is high, especially given the upcoming presentation at SIGGRAPH Asia.

This innovation not only stands as a testament to NVIDIA's leading role in the radiance field boom but also opens doors for creators and developers to harness these advanced methods. As we await further developments, NVIDIA's approach to NeRFs continues to push the boundaries of 3D rendering, blending efficiency with unmatched fidelity.