Michael Rubloff

Sep 11, 2024

It’s something we’ve covered several times before: NeRFs currently render more slowly than Gaussian Splatting. Each time, though, we’ve added the caveat that this is not a hard rule, and we have in fact seen examples to the contrary.

One of those examples was Adaptive Shells, the NVIDIA paper and SIGGRAPH Asia 2023 Best Paper winner. Adaptive Shells showed NeRFs running at 120 fps, but as it turns out, a concurrent paper submitted at the same time achieves similar results with a different approach.

That paper is Quadrature Fields, and it’s what we’re looking at today. You might recognize some of the authors, such as Gopal Sharma and Daniel Rebain, from 3DGS-MCMC, a paper that garnered a lot of attention for increasing 3DGS reconstruction fidelity.

NeRF relies on volumetric rendering, which requires evaluating a large neural network at hundreds of points along each ray. The resulting computational cost makes real-time applications such as interactive 3D visualization or video games nearly impossible without top-tier hardware. Several approaches, such as MobileNeRF and BakedSDF, have been proposed to alleviate this problem by approximating scenes with textured meshes, essentially reducing NeRF’s volumetric rendering to the rasterization of solid surfaces. However, these approaches struggle with transparency and complex materials such as glass or fur, which require evaluating several points along each ray.
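To make that cost concrete, here is a minimal sketch of the standard NeRF volume rendering quadrature: each sample gets an opacity `alpha = 1 - exp(-sigma * delta)`, and colors are accumulated front to back, weighted by the light that survives to that sample. The `field` function is a hypothetical stand-in for the NeRF network (a toy density bump, not a trained model), and 256 samples per ray is an assumed value within the "hundreds of points" mentioned above.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """NeRF-style volume rendering quadrature along one ray.

    Returns the accumulated RGB color and the transmittance left after the last sample.
    """
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light surviving past this sample
    return rgb, transmittance


def field(t):
    """Hypothetical stand-in for the NeRF network: a dense, orange 'wall' near t = 2."""
    sigma = 50.0 * math.exp(-((t - 2.0) ** 2) / 0.01)
    return sigma, (1.0, 0.5, 0.2)


# Assumed sampling setup: 256 evenly spaced samples between the near and far planes.
n_samples = 256
near, far = 0.0, 4.0
delta = (far - near) / n_samples
ts = [near + (i + 0.5) * delta for i in range(n_samples)]

evaluations = [field(t) for t in ts]  # one network query per sample
sigmas = [s for s, _ in evaluations]
colors = [c for _, c in evaluations]
rgb, T = composite_ray(sigmas, colors, [delta] * n_samples)
print(len(evaluations), [round(c, 3) for c in rgb], T)
```

Every one of those 256 samples is a network query; at 1920×1080 that adds up to roughly 530 million queries per frame, which is why replacing them with a handful of baked surfaces pays off.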

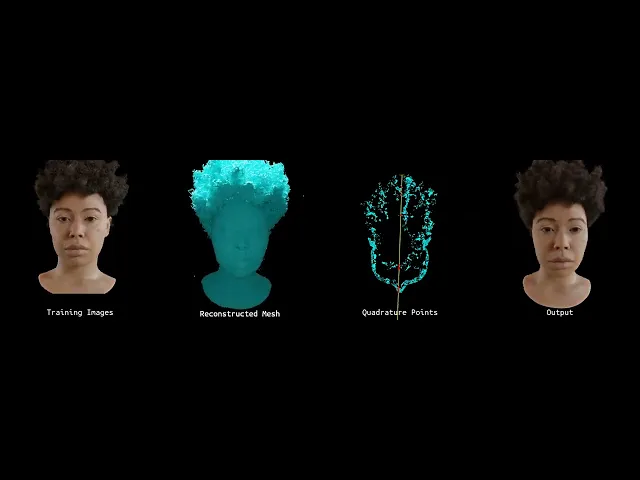

To address these challenges, Quadrature Fields introduces a more efficient representation consisting of “layers” of textured meshes. The process begins by training a NeRF model using NVIDIA’s Instant NGP and Ruilong Li’s Nerfacc. The researchers then train a quadrature field: a neural field trained by optimizing its gradients so that the quadrature points it defines align with the scene’s volumetric content.
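The article does not spell out how quadrature points are read off the field, so the sketch below assumes one simple convention purely for illustration: quadrature points sit where a scalar field crosses zero along the ray. `toy_quadrature_field` is a hand-written hypothetical function, not a trained network, and the dense scan is only for demonstration; in the pipeline described here, the field is instead turned into meshes once, and rays then intersect those meshes.

```python
def crossings_along_ray(q, near, far, n_steps=1000):
    """Return the depths t at which the scalar field q changes sign along a ray.

    Each sign change is refined by linear interpolation between the two
    bracketing samples (the same rule Marching Cubes uses to place vertices).
    """
    ts = [near + (far - near) * i / n_steps for i in range(n_steps + 1)]
    values = [q(t) for t in ts]
    points = []
    for i in range(n_steps):
        t0, t1 = ts[i], ts[i + 1]
        v0, v1 = values[i], values[i + 1]
        if (v0 < 0.0) != (v1 < 0.0):  # sign change => a crossing inside [t0, t1]
            points.append(t0 + (t1 - t0) * v0 / (v0 - v1))
    return points


def toy_quadrature_field(t):
    """Hypothetical field with crossings at a surface (t = 1.5) and a translucent slab (2.5 to 3.0)."""
    return (t - 1.5) * (t - 2.5) * (t - 3.0)


points = crossings_along_ray(toy_quadrature_field, near=0.0, far=4.0)
print([round(p, 4) for p in points])  # three quadrature points instead of hundreds of samples
```

The appeal of this kind of representation is that a solid surface contributes one point and a translucent region only a few, rather than a fixed, dense set of samples per ray.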

Next, the Marching Cubes algorithm is applied to the quadrature field, producing a polygonal mesh that represents the scene. This mesh enables efficient rendering, leveraging modern graphics hardware to speed up the process. To further optimize the system, a fine-tuning process adjusts the mesh vertices using photometric reconstruction losses. This step refines the placement of quadrature points, ensuring greater accuracy in areas with complex geometry while reducing the number of points needed for simpler surfaces.
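Marching Cubes itself is too long to reproduce here, but its core vertex-placement rule is easy to show in 2D. The sketch below is a simplified Marching Squares pass (the 2D analogue), assuming a scalar field sampled on a grid and an iso-value of 0; it only places vertices and skips the case lookup table that connects them into segments. The circle signed-distance field is a hypothetical stand-in for the learned quadrature field.

```python
import math


def edge_vertices(grid, iso=0.0):
    """Place one vertex on every grid edge whose endpoint values straddle `iso`.

    grid[y][x] holds scalar samples on an integer lattice. Each vertex is
    linearly interpolated along its edge, the vertex-placement rule shared by
    Marching Squares (2D) and Marching Cubes (3D). Connecting the vertices into
    segments or triangles (the case lookup table) is omitted here.
    """
    height, width = len(grid), len(grid[0])
    verts = []
    for y in range(height):
        for x in range(width):
            v0 = grid[y][x] - iso
            if x + 1 < width:  # horizontal edge (x, y) -> (x + 1, y)
                v1 = grid[y][x + 1] - iso
                if (v0 < 0.0) != (v1 < 0.0):
                    verts.append((x + v0 / (v0 - v1), float(y)))
            if y + 1 < height:  # vertical edge (x, y) -> (x, y + 1)
                v1 = grid[y + 1][x] - iso
                if (v0 < 0.0) != (v1 < 0.0):
                    verts.append((float(x), y + v0 / (v0 - v1)))
    return verts


# Hypothetical test field: signed distance to a circle of radius 3 centered at (4, 4).
grid = [[math.hypot(x - 4, y - 4) - 3.0 for x in range(9)] for y in range(9)]
verts = edge_vertices(grid)
worst = max(abs(math.hypot(vx - 4, vy - 4) - 3.0) for vx, vy in verts)
print(len(verts), round(worst, 3))  # extracted vertices hug the circle
```

Because vertices land between grid samples, the extracted surface is more precise than the grid resolution alone would suggest; the photometric fine-tuning described above then moves those vertices further to better match the training images.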

After fine-tuning, the neural features (such as color, opacity, and view-dependent effects) are baked into the mesh. These features are compressed into texture maps, making the representation more compact and efficient for real-time rendering. This baking process allows the final output to be rendered with frameworks like NVIDIA’s OptiX ray-tracing library, achieving fast, high-quality results. For solid objects, the system produces a single intersection point along the ray, mimicking traditional surface rendering. For transparent materials, the method finds multiple quadrature points along the ray to handle complex transparency effects, which allows it to accurately represent volumetric effects in materials like glass and fur.
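Once the ray tracer has returned the layers a ray hits, the remaining per-pixel work is small. The sketch below assumes standard front-to-back alpha compositing over those hits, each carrying a baked color and opacity. The `Hit` type, the sample values, and the early-termination threshold are hypothetical; the sketch ignores view-dependent effects and does not use OptiX or reproduce the paper’s shading code.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    depth: float   # distance along the ray to the mesh layer
    rgb: tuple     # baked color looked up from the texture at the hit point
    alpha: float   # baked opacity in [0, 1]


def shade(hits, background=(0.0, 0.0, 0.0), min_transmittance=1e-3):
    """Front-to-back alpha compositing over the mesh layers one ray intersects."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for hit in sorted(hits, key=lambda h: h.depth):  # nearest layer first
        weight = transmittance * hit.alpha
        for k in range(3):
            color[k] += weight * hit.rgb[k]
        transmittance *= 1.0 - hit.alpha
        if transmittance < min_transmittance:  # early ray termination
            break
    for k in range(3):
        color[k] += transmittance * background[k]  # remaining light shows the background
    return tuple(color)


# Solid surface: a single opaque hit behaves like ordinary surface rendering.
solid = [Hit(depth=2.0, rgb=(0.8, 0.1, 0.1), alpha=1.0)]

# Glass-like region: two partially transparent layers (listed out of order on purpose).
glass = [
    Hit(depth=3.0, rgb=(0.2, 0.2, 0.9), alpha=0.5),
    Hit(depth=1.0, rgb=(1.0, 1.0, 1.0), alpha=0.25),
]

print(shade(solid))
print(shade(glass, background=(1.0, 1.0, 1.0)))
```

The opaque case stops after one hit, while the glass case blends two layers and lets some background through, mirroring the solid versus transparent distinction described above.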

The method achieves rendering speeds of over 100 frames per second for full HD (1920×1080) images, and in some cases—especially with synthetic data—frame rates can reach as high as 500 fps. This performance is significant, making real-time, high-quality volumetric rendering accessible for various applications, including interactive 3D visualization and gaming.
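For a sense of scale, the quick calculation below converts those frame rates into a per-frame time budget and a ray throughput. It assumes one primary ray per pixel; any supersampling for antialiasing would multiply the ray count.

```python
WIDTH, HEIGHT = 1920, 1080  # full HD resolution quoted in the article


def frame_budget(fps, width=WIDTH, height=HEIGHT):
    """Return (milliseconds available per frame, primary rays per second at 1 ray/pixel)."""
    ms_per_frame = 1000.0 / fps
    rays_per_second = width * height * fps
    return ms_per_frame, rays_per_second


for fps in (100, 500):
    ms, rays = frame_budget(fps)
    print(f"{fps} fps: {ms:.1f} ms per frame, {rays:,} primary rays per second")
```

At 100 fps each frame has 10 ms and the renderer handles about 207 million primary rays per second; at 500 fps the budget shrinks to 2 ms per frame.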

Additionally, the system's VRAM consumption remains reasonable, with no more than 9GB used in the examples provided, making it accessible for users with mid-tier NVIDIA GPUs. The resulting files range from 330MB to 4GB, depending on the complexity of the scene and the need to store neural features and compressed textures.

By combining modern graphics hardware, ray tracing, and novel techniques to extract layers of meshes using the quadrature field, this method offers a significant improvement in NeRF rendering speeds. Achieving real-time, high-quality volumetric rendering paves the way for more immersive and interactive experiences across multiple industries. This research shows there is still room for large-scale improvements in radiance fields, potentially revolutionizing how we approach real-time 3D rendering.

The code is already available here under an MIT License. The team will be presenting at ECCV, and their project page with more information can be found here.
