Since the unveiling of Sora's large-scale generative capabilities, the tech world has been buzzing with anticipation about the future of 3D scene generation. There hasn't been much public work since then showcasing what could be coming, but today we're looking at RealmDreamer, which creates scene-level generations from text prompts.

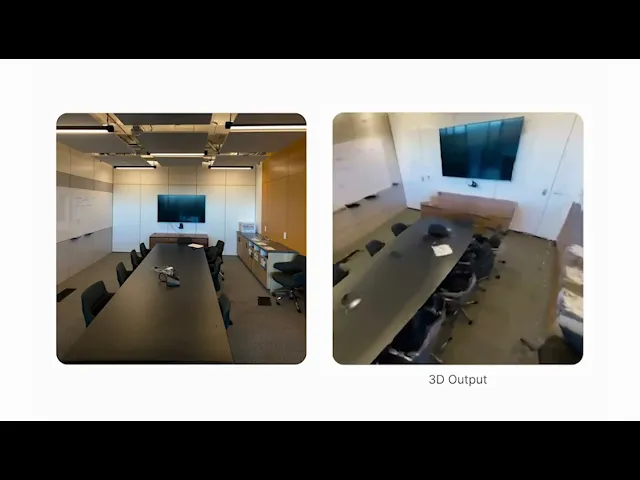

As you might expect, they're using a radiance field method: 3D Gaussian Splatting. The process begins with generating a reference image from a text prompt, followed by using a monocular depth model to create an initial 3D point cloud.

More specifically, to avoid a rocky start, they initialize the scene using a pretrained 2D prior at a predefined pose. In other words, they generate a 2D image at a specific position within the larger (eventual) scene. In the examples shown, that image comes from Stable Diffusion XL, Adobe Firefly, or DALL-E 3. They then lift it to 3D using a combination of two monocular depth estimators, Marigold and DepthAnything.
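To make the lifting step more concrete, here is a minimal sketch of how a depth map can be back-projected into a point cloud with a pinhole camera. It is not RealmDreamer's code: the function name `unproject_depth`, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the assumption that depth is already metric are all illustrative choices. How the paper combines and scales the Marigold and DepthAnything outputs is not shown here.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def unproject_depth(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a per-pixel depth map into camera-space 3D points.

    Assumes a pinhole camera looking down +Z with depth measured along Z,
    and that depth is already metric (monocular estimators often predict
    relative depth that would need rescaling first).
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v indexes pixel rows (image y)
        for u, z in enumerate(row):      # u indexes pixel columns (image x)
            if z <= 0:
                continue                 # treat non-positive depth as invalid
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A tiny 2x2 depth map; the bottom-right pixel has no valid depth.
cloud = unproject_depth([[2.0, 2.0], [2.0, 0.0]], fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
# → [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (-1.0, 1.0, 2.0)]
```

The points come out in the camera's own frame; placing them at the "predefined pose" would mean applying a camera-to-world rotation and translation afterward, which this sketch leaves out.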

Together, the reference image and its depth estimate form the initial point cloud. However, a single starting image can lead to a wide variety of outcomes, including a poor starting point for the rest of the optimization. To raise that initial floor, they outpaint the generated image to extend the point cloud to additional viewpoints, using Stable Diffusion to fill in the gaps those new views reveal. This step is critical to the success of RealmDreamer.
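Filling in gaps from a new viewpoint generally starts with finding which pixels the existing point cloud fails to cover. The sketch below is a simplified illustration, not RealmDreamer's implementation: it projects points into a hypothetical target camera (rotation `R`, translation `t`, pinhole intrinsics) and marks every pixel that no point reaches. In a pipeline like the one described, a mask of this kind is what an inpainting model such as Stable Diffusion would be asked to fill. The function name `hole_mask` and its one-pixel-per-point splatting are assumptions made for clarity.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def hole_mask(points: Sequence[Point], R: Sequence[Sequence[float]],
              t: Sequence[float], fx: float, fy: float, cx: float, cy: float,
              width: int, height: int) -> List[List[bool]]:
    """Return a height x width mask that is True where no point projects.

    R and t map world coordinates into the new camera: p_cam = R p + t.
    Each point lands on its single nearest pixel, a deliberately crude
    stand-in for a real splatting rasterizer.
    """
    covered = [[False] * width for _ in range(height)]
    for p in points:
        # Rotate and translate the world-space point into the camera frame.
        xc, yc, zc = (sum(R[i][j] * p[j] for j in range(3)) + t[i]
                      for i in range(3))
        if zc <= 0:
            continue  # points behind the camera cannot be seen
        # Pinhole projection, then snap to the nearest pixel center.
        u = math.floor(fx * xc / zc + cx + 0.5)
        v = math.floor(fy * yc / zc + cy + 0.5)
        if 0 <= u < width and 0 <= v < height:
            covered[v][u] = True
    # Holes are the pixels no point reached: candidates for inpainting.
    return [[not c for c in row] for row in covered]


I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
pts = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (-1.0, 1.0, 2.0)]
print(hole_mask(pts, I, [0, 0, 0], 1.0, 1.0, 0.5, 0.5, 2, 2))
# → [[False, False], [False, True]]
```

Moving the camera (changing `t` or `R`) shifts which pixels are covered, and the newly exposed `True` pixels are the regions a generative model would need to invent.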

The final touch in RealmDreamer's process is a fine-tuning phase that sharpens details and improves the cohesiveness of the scene. This phase uses a text-to-image diffusion model personalized to the input image, helping details such as textures and lighting stay consistent with the original text prompt.

You might be interested to know that the inpainting and fine-tuning stages are conducted within Nerfstudio. They use the original Inria implementation of Gaussian Splatting, but perhaps it could be officially supported within Nerfstudio in the coming months? In theory, you could generate an entire scene with RealmDreamer and then potentially build individual elements with SigNeRF, all without ever leaving the Nerfstudio ecosystem.

End-to-end, the full generation takes roughly 10 hours, but encouragingly it can run on a single GPU with 24GB of VRAM, opening the door to the upper end of consumer GPUs. As with the rest of generative AI, it should progress rapidly in both fidelity and speed, and this looks like a good starting point for scene-level generation. I also expect that as diffusion-based methods improve in fidelity and view consistency, 3D will be a direct beneficiary, allowing for increasingly hyperrealistic outputs.

Check out the RealmDreamer project page for more information and even more examples!

There's so much happening right now, and while most generative 3D work has focused on individual objects thus far, I would not discount the progress being made both publicly through research and quietly behind closed doors.