With ICCV behind us and the ton of NeRF papers that were shown there, one seriously caught my eye. It was one of the finalists for best paper (along with Zip-NeRF), and for good reason. The paper is called Tri-MipRF; now let me tell you why.

Let's go back a bit to 2021. Mip-NeRF really raised the ceiling on the output quality NeRFs could reach. The drawback? You had to wait a couple of days for it to finish. Then came Instant-NGP, which opened the floodgates to consumer NeRFs, albeit at lower quality than Mip-NeRF. NeRFs that are both fast and high quality have remained an elusive grail.

Well, a major obstacle to NeRFs that are both high quality and fast is finding an efficient anti-aliasing representation. This is where Tri-MipRF comes in: it achieves Instant-NGP speed with reconstruction quality higher than Mip-NeRF's. Oh man. Here we go! This is so exciting to write about, and reading the paper was even more exciting.

This combination of speed and accuracy might start to sound like Google's Zip-NeRF. In fact, Zip-NeRF attacks the exact same problem, but it uses a multisampling-based method, while Tri-MipRF uses a pre-filtering-based one.

Check out this quick comparison video:

Instant-NGP on the left. Tri-MipRF on the right.

Wow, I need to calm down. Ok, it looks great. How can this be possible?

A central obstacle to high-quality, efficient representations is the lack of a representation that supports efficient area sampling. Two strategies, supersampling and pre-filtering (also known as area sampling), have traditionally been used to address this problem. However, both tend to be computationally intensive, which conflicts with the goal of efficiency. Tri-MipRF starts by casting a cone (instead of a ray) from the camera's projection center through the image plane, where each pixel is treated as a disc.
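To make the supersampling vs. pre-filtering trade-off concrete, here is a toy 1D sketch. It is not Tri-MipRF's code: the pre-filter here is a summed-area table (a classic pre-filtering structure, swapped in because it is the shortest to write), and the texture and pixel footprint are made up. Supersampling pays per query in proportion to the number of samples, while pre-filtering pays once up front and then answers any footprint with a constant number of lookups.

```python
import numpy as np

def supersample(texture: np.ndarray, lo: float, hi: float, n: int) -> float:
    """Supersampling: average n nearest-texel point samples over [lo, hi)."""
    xs = lo + (np.arange(n) + 0.5) * (hi - lo) / n          # sample positions
    idx = np.clip(np.floor(xs).astype(int), 0, len(texture) - 1)
    return float(texture[idx].mean())                       # cost grows with n

def build_prefix_sum(texture: np.ndarray) -> np.ndarray:
    """Pre-filtering step, done once: a 1D summed-area table."""
    return np.concatenate([[0.0], np.cumsum(texture)])

def prefiltered(table: np.ndarray, lo: int, hi: int) -> float:
    """Area-sampled average over texels [lo, hi) with two table lookups."""
    return float((table[hi] - table[lo]) / (hi - lo))

texture = np.sin(np.arange(1024) * 0.37)
table = build_prefix_sum(texture)
# A pixel footprint covering 64 texels: 64 lookups vs. 2 lookups.
print(supersample(texture, 100.0, 164.0, 64), prefiltered(table, 100, 164))
```

Both calls return the same area average (up to floating-point rounding), but only the pre-filtered one stays cheap as the footprint grows. Tri-MipRF's mipmaps play the same role as the table here, just in 2D and with a discrete set of footprint sizes.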

The disc's radiance is captured by sampling spheres inside this cone (labeled C in the figure). Traditional NeRF approaches render a pixel by casting a single ray through it, which is known as ray casting. But this doesn't match real-world imaging sensors, where each pixel collects light over a small area rather than along a line. Tri-MipRF instead casts a cone for each pixel, so each sample's footprint grows with its distance from the camera and pixels are sampled accurately whether the content is near or far. By sampling multiple spheres along the cone, the method captures the area of the pixel and emulates real-world imaging more faithfully. It also skips unnecessary samples in empty space, saving computation.
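Here is a minimal sketch of cone sampling, assuming a pinhole camera with square pixels, a pixel disc whose area matches a 1x1 pixel, uniform spacing along the cone axis, and a coarse boolean occupancy grid for skipping empty space. All function names, parameters, and the radius convention are illustrative assumptions, not Tri-MipRF's actual implementation. The key idea is similar triangles: the sphere radius grows linearly with distance t.

```python
import numpy as np

def cone_samples(pixel_uv, K, near, far, n_samples, pixel_radius=1.0 / np.sqrt(np.pi)):
    """Sample spheres along the cone through one pixel, in camera coordinates.

    Hypothetical setup: pinhole intrinsics K with square pixels (fx == fy), the
    pixel is a disc of `pixel_radius` pixels (area-matched to a 1x1 pixel), and
    samples are spaced uniformly in distance t along the cone axis.
    """
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    u, v = pixel_uv
    p = np.array([(u - cx) / fx, (v - cy) / fy, 1.0])  # pixel center on plane z = 1
    dist_to_plane = np.linalg.norm(p)
    d = p / dist_to_plane                              # unit cone axis
    disc_radius = pixel_radius / fx                    # pixel disc radius on plane z = 1
    t = np.linspace(near, far, n_samples)
    centers = t[:, None] * d[None, :]                  # camera center at the origin
    radii = t * disc_radius / dist_to_plane            # similar triangles
    return centers, radii

def skip_empty(centers, radii, occupancy, bbox_min, bbox_max):
    """Drop samples whose centers fall in unoccupied cells of a coarse boolean grid."""
    res = np.array(occupancy.shape)
    rel = (centers - bbox_min) / (bbox_max - bbox_min)  # normalize to [0, 1)
    inside = np.all((rel >= 0) & (rel < 1), axis=1)
    idx = np.floor(rel[inside] * res).astype(int)
    keep = np.zeros(len(centers), dtype=bool)
    keep[inside] = occupancy[idx[:, 0], idx[:, 1], idx[:, 2]]
    return centers[keep], radii[keep]
```

Spheres near the camera come out tiny and distant ones large, which is what later lets the encoding read coarser, pre-filtered features for far-away samples.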

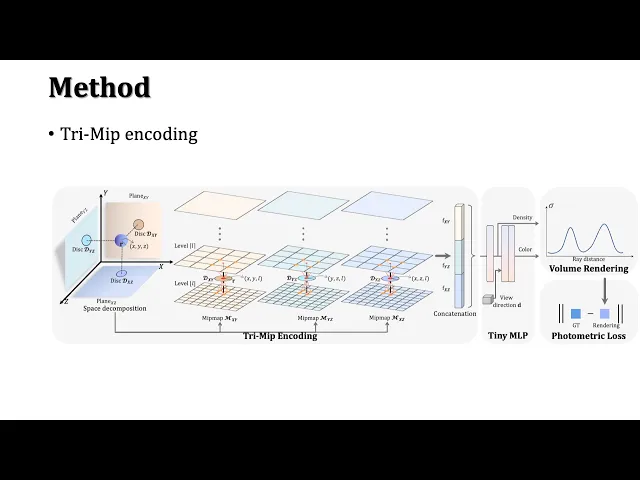

The Tri-Mip encoding then featurizes these spheres into feature vectors using three mipmaps. Its purpose is to preserve rendering detail while keeping reconstruction and rendering efficient. It decomposes 3D space into three orthogonal planes (XY, XZ, YZ), and each plane is represented by a mipmap that models a pre-filtered feature space: every coarser level stores an already-averaged, lower-resolution version of the level below it.
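A minimal sketch of the three-plane mipmap structure, assuming square power-of-two feature planes and assuming (as a simplification) that each coarser level is derived from the one below by 2x2 averaging. The resolution, channel count, random initialization, and the `build_mipmap` / `build_tri_mip` names are hypothetical; in a real model the base level would be learned.

```python
import numpy as np

def build_mipmap(base: np.ndarray) -> list[np.ndarray]:
    """Build a mipmap from an (H, W, C) feature plane by repeated 2x2 averaging.

    Assumes H == W and a power of two; each level is a box-pre-filtered,
    half-resolution copy of the previous one, down to 1x1.
    """
    levels = [base]
    while levels[-1].shape[0] > 1:
        x = levels[-1]
        h, w, c = x.shape
        # Group texels into 2x2 blocks and average each block.
        levels.append(x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3)))
    return levels

def build_tri_mip(res: int, channels: int, rng: np.random.Generator) -> dict:
    """One mipmap per axis-aligned plane (XY, XZ, YZ), randomly initialized."""
    return {
        plane: build_mipmap(rng.normal(size=(res, res, channels)).astype(np.float32))
        for plane in ("xy", "xz", "yz")
    }

tri = build_tri_mip(16, 4, np.random.default_rng(0))
print([level.shape for level in tri["xy"]])
```

Storing three 2D pyramids instead of one 3D pyramid is what keeps memory manageable: the cost scales with the square of the resolution rather than its cube.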

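To query the features for a sampled sphere, the method orthogonally projects the sphere onto each of the three planes, which gives a disc on each plane. The disc's center selects the location on the plane, and its radius selects the mipmap level, so larger spheres read from coarser, more heavily pre-filtered levels. The features are fetched using trilinear interpolation (bilinear within the two nearest levels, then linear between them), so they vary smoothly with both the sphere's position and its size.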
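The lookup can be sketched as follows. Assumptions, all labeled: the scene is normalized to [0, 1]^3, each plane's mipmap is a list of (H, W, C) arrays from fine to coarse, the level rule matches the texel size to the disc diameter (`log2(2 * r * base_res)`, a hypothetical choice), and the three plane features are concatenated. This illustrates mipmap trilinear filtering in general, not Tri-MipRF's exact kernel.

```python
import numpy as np

def bilinear(level: np.ndarray, u: float, v: float) -> np.ndarray:
    """Bilinearly interpolate an (H, W, C) grid at normalized coords u, v in [0, 1]."""
    h, w, _ = level.shape
    # Texel centers sit at (i + 0.5) / w; clamp at the borders.
    x = np.clip(u * w - 0.5, 0, w - 1)
    y = np.clip(v * h - 0.5, 0, h - 1)
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * level[y0, x0] + fx * level[y0, x1]
    bottom = (1 - fx) * level[y1, x0] + fx * level[y1, x1]
    return (1 - fy) * top + fy * bottom

def trilinear(mip, u, v, radius):
    """Bilinear lookups in two adjacent levels, blended by a fractional level."""
    base_res = mip[0].shape[0]
    # Hypothetical level rule: level-0 texel size (1 / base_res) vs. disc diameter.
    lvl = np.clip(np.log2(max(2 * radius * base_res, 1e-8)), 0, len(mip) - 1)
    l0 = int(np.floor(lvl))
    l1 = min(l0 + 1, len(mip) - 1)
    t = lvl - l0
    return (1 - t) * bilinear(mip[l0], u, v) + t * bilinear(mip[l1], u, v)

def query_sphere(tri_mip, center, radius):
    """Project a sphere onto XY, XZ, YZ (radius is unchanged) and concatenate features."""
    x, y, z = center
    coords = {"xy": (x, y), "xz": (x, z), "yz": (y, z)}
    return np.concatenate([trilinear(tri_mip[p], *coords[p], radius) for p in ("xy", "xz", "yz")])
```

Because an orthogonal projection of a sphere is always a disc with the same radius, one scalar per sample is enough to pick the level on all three planes.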

A tiny MLP (multi-layer perceptron) then maps these feature vectors and the view direction to the density and color of each sphere. Finally, the volume rendering integral accumulates these densities and colors along the cone to produce the pixel's final color.
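Below is a sketch of this last stage, assuming a one-hidden-layer MLP with random weights, an exp activation for density, a sigmoid for color, and the view direction fed only to the color branch (a common NeRF convention, assumed here rather than taken from the paper). The compositing uses the standard discrete volume rendering estimate C = sum_i T_i * alpha_i * c_i, with alpha_i = 1 - exp(-sigma_i * delta_i) and T_i = prod_{j<i} (1 - alpha_j). The sizes `FEAT_DIM` and `HIDDEN` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
FEAT_DIM, HIDDEN = 48, 64  # hypothetical sizes, not Tri-MipRF's configuration
W_hidden = rng.normal(scale=0.1, size=(FEAT_DIM, HIDDEN))
w_sigma = rng.normal(scale=0.1, size=(HIDDEN,))
W_color = rng.normal(scale=0.1, size=(HIDDEN + 3, 3))

def tiny_mlp(features, view_dir):
    """Map (N, FEAT_DIM) features and a unit view direction to density and RGB."""
    h = np.maximum(features @ W_hidden, 0.0)              # ReLU hidden layer
    sigma = np.exp(h @ w_sigma)                           # density > 0 (exp activation)
    dirs = np.broadcast_to(view_dir, (len(features), 3))
    logits = np.concatenate([h, dirs], axis=1) @ W_color  # color also sees the direction
    rgb = 1.0 / (1.0 + np.exp(-logits))                   # sigmoid keeps RGB in [0, 1]
    return sigma, rgb

def composite(sigma, rgb, t):
    """Discrete volume rendering: front-to-back alpha compositing along the cone."""
    delta = np.diff(t, append=t[-1] + 1e10)               # last interval left open
    alpha = 1.0 - np.exp(-sigma * delta)                  # opacity of each segment
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(sigma * delta)[:-1]]))
    weights = trans * alpha                               # T_i * alpha_i
    return (weights[:, None] * rgb).sum(axis=0), weights

feats = rng.normal(size=(32, FEAT_DIM))                   # stand-in for Tri-Mip features
sigma, rgb = tiny_mlp(feats, np.array([0.0, 0.0, 1.0]))
color, weights = composite(sigma, rgb, np.linspace(2.0, 6.0, 32))
print(color, weights.sum())
```

Keeping the MLP this small is only viable because the encoding already carries most of the scene information; the network just decodes it.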

As the Tri-MipRF paper puts it: "We also develop a hybrid volume-surface rendering strategy to enable real-time rendering on consumer-level GPUs, e.g., 60 FPS on an Nvidia RTX 3060 graphics card."

The final step in the process optimizes rendering speed. Volume rendering provides the necessary radiance detail, but it is computationally heavy. A hybrid approach that combines volume and surface rendering achieves faster rendering times. A proxy mesh, derived from the reconstructed density field, gives the approximate distance from the camera to the object: rasterizing this mesh efficiently yields the hit point on the object surface for each pixel, so the expensive volume rendering can be concentrated around that point instead of along the whole cone.
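A minimal sketch of the hybrid idea under loud assumptions: the proxy mesh is replaced by an analytic sphere, GPU rasterization is replaced by a ray-sphere intersection that returns the first hit distance, and a miss is treated as background. The band width and sample count are hypothetical. The point is only to show how a surface hit lets volume rendering use a handful of samples instead of marching the whole ray.

```python
import numpy as np

def ray_sphere_hit(origin, direction, center, radius):
    """Stand-in for rasterizing the proxy mesh: nearest positive hit distance, or None.

    Assumes `direction` is unit length and the ray starts outside the proxy.
    """
    oc = origin - center
    b = np.dot(oc, direction)
    c = np.dot(oc, oc) - radius ** 2
    disc = b * b - c
    if disc < 0:
        return None                                   # ray misses the proxy
    t = -b - np.sqrt(disc)
    return t if t > 0 else None

def hybrid_samples(origin, direction, proxy_center, proxy_radius, band=0.05, n=8):
    """Place a few volume-rendering samples in a narrow band around the surface hit."""
    t_hit = ray_sphere_hit(origin, direction, proxy_center, proxy_radius)
    if t_hit is None:
        return np.empty(0)                            # assumption: a miss means background
    return np.linspace(t_hit - band, t_hit + band, n)

# 8 samples near the surface instead of, say, 128 spread over the whole ray.
samples = hybrid_samples(np.zeros(3), np.array([0.0, 0.0, 1.0]), np.array([0.0, 0.0, 5.0]), 1.0)
print(len(samples), samples.min(), samples.max())
```

The band samples would then go through the same encoding, MLP, and compositing steps as before; only their number and placement change.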

Tri-MipRF efficiently reconstructs radiance fields from calibrated multi-view images of static scenes. It produces high-fidelity renderings that preserve fine-grained detail in close-up views and eliminate aliasing in distant views.

TL;DR of how Tri-MipRF works:

Cone vs. Ray Casting: Treating pixels as discs and casting cones rather than rays allows for more accurate rendering, especially when content is viewed from very different distances.

Tri-Mip Encoding: This novel encoding method breaks the 3D space into three planes, each represented by a mipmap. It provides an efficient way to capture and represent 3D space details, ensuring that the reconstructed scenes look coherent at varying distances.

Hybrid Rendering: By combining volume rendering (for radiance details) with surface rendering (using a proxy mesh for speed), this method achieves faster rendering speeds, which is vital for real-time applications.

The Tri-MipRF method, through these techniques, bridges the gap between computational efficiency and high-fidelity renderings. It presents a more practical approach to scene reconstruction and rendering, especially in applications where speed is a crucial factor.

It's pretty amazing that we're starting to see publicly available code that beats Mip-NeRF's quality at Instant-NGP's speed. The above graph says it all. There are so many useful ways this could be applied to existing NeRF methods, and I'm hoping researchers and teams will see this and try it out for themselves. It would be amazing to see it appear as a training method in nerfstudio. It's really exciting progress for NeRFs: higher fidelity with even faster generation. Oh, and by the way: all of this comes with a 25% reduction in model size compared to Instant-NGP.

I would love to see a head-to-head comparison of Tri-MipRF and Zip-NeRF. Unfortunately, Zip-NeRF's code has not been publicly released, while Tri-MipRF is open source and can be viewed here.