Katrin Schmid

Mar 2, 2023

TL;DR: History of Neural Radiance Fields

Light field and hologram capture started with a big theoretical idea 115 years ago, and we have struggled to make it viable ever since. Neural Radiance Fields (NeRF), together with gaming computers, now for the first time provide a promising, easy and low-cost way for everybody to capture and display light fields.

Neural Radiance Fields (NeRF) recently had their third birthday, but the technology is just the latest answer to a question people have been chasing since the 1860s: how do you capture and recreate space (from images)?

This is in no way an authoritative version, and we are happy for people to contribute. Let's keep it short and relevant.

Early photography and photosculpture, ca 1850

Only a few years after (black-and-white) photography became practical, with the collodion process introduced in England in 1851, people took the idea further. Aerial photogrammetry from balloons began, and with it the idea of a virtual camera created by combining multiple pictures.

Also, in France during the mid-to-late 1800s, one could go and sit for a “photosculpture”. In a session, 24 cameras arranged in a circle around the subject photographed it simultaneously, each from a different, evenly spaced angle, and a 3D reproduction of the subject was made from these photos. The photos were traced and each profile was cut from a thin slice of wood. The wood slices were then assembled in a radial pattern matching the positions from which the original photos were taken. Once the wood model was finished, clay and other materials filled the gaps and details were added by hand.

While aerial photogrammetry has remained in use ever since its invention, photosculptures went out of fashion within a couple of years.

But the central concept that a 3D figure can be adequately represented by a series of structured 2D representations is remarkably similar to how we record NeRFs now.
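Photogrammetry, the approach that survived, rests on triangulation: a ground point seen from two known camera positions lies where the two viewing rays cross. A minimal 2D sketch, assuming known camera positions and ray angles; the function name `triangulate_2d` is hypothetical, and real photogrammetry works in 3D with calibrated cameras and least-squares fitting over many views:

```python
import math

def triangulate_2d(cam_a, angle_a, cam_b, angle_b):
    """Intersect two viewing rays in a 2D plane (hypothetical helper).

    cam_a, cam_b: (x, y) camera positions.
    angle_a, angle_b: ray directions in radians, measured from the +x axis.
    Returns the (x, y) point where the two rays cross.
    """
    dax, day = math.cos(angle_a), math.sin(angle_a)
    dbx, dby = math.cos(angle_b), math.sin(angle_b)
    # Solve cam_a + s * d_a = cam_b + t * d_b for s with Cramer's rule.
    denom = dax * (-dby) - day * (-dbx)
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    rx, ry = cam_b[0] - cam_a[0], cam_b[1] - cam_a[1]
    s = (rx * (-dby) - ry * (-dbx)) / denom
    return (cam_a[0] + s * dax, cam_a[1] + s * day)

# Two cameras 10 units apart both sighting a point at (5, 5).
print(triangulate_2d((0.0, 0.0), math.atan2(5, 5), (10.0, 0.0), math.atan2(5, -5)))
```

Two pictures are the minimum; with more views the crossing point is usually found by least squares, which is also how errors in the individual measurements are averaged out.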

The Theory: The plenoptic function and lightfields 1908

Practitioners had the idea first, but even the theoretical foundation for NeRF dates back to 1908. Nobel laureate physicist Gabriel Lippmann proposed light field capture, which he called integral photography and which, unlike rendering in computer graphics, is inspired more by faceted insect eyes than by human vision. He was well ahead of his time and probably never got to see his idea in practice; he is better known for his interference method of color photography, the work that earned him the Nobel Prize.

The math looks a bit scary, but it's not that complicated, and it will get us to NeRF’s equation eventually. The plenoptic function describes the degrees of freedom of a light ray, treated as a wave, with the parameters: irradiance (brightness), position, wavelength (color), time, angle, phase, polarization and bounce. It measures physical light properties at every point in space and thereby describes how light transport occurs throughout a 3D volume.
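In the form most often quoted today (Adelson and Bergen, 1991), the plenoptic function keeps seven of these quantities and drops phase, polarization and bounce: it gives the intensity P seen from any viewing position (V_x, V_y, V_z), in any direction (θ, ϕ), at any wavelength λ and time t. A sketch of that common form:

```latex
P = P(V_x, V_y, V_z, \theta, \phi, \lambda, t)
```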

A simplification: Light fields, 1936

An eight-dimensional function is a lot of data to capture, so Arun Gershun came up with a simplification. The light field, first described in 1936, is defined as radiance as a function of position and direction in regions of space free of occluders. This formulation has been widely used by practitioners ever since.

A light field is a function that describes how light transport occurs throughout a 3D volume. It gives the radiance of the light rays passing through every point x = (x, y, z) in space in every direction d, described either by the angles θ and ϕ or by a unit vector. Together, position and direction form a 5D space that describes light transport in a 3D scene.
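The two ways of describing a direction are interchangeable. A small sketch, assuming the common physics convention (θ measured from the +z axis, ϕ around it in the x-y plane); `toy_light_field` is a made-up stand-in scene, not a real capture:

```python
import math

def direction_from_angles(theta, phi):
    """Convert spherical angles to a unit direction vector d.

    Assumed convention (one of several in use): theta is the polar angle
    measured from the +z axis, phi is the azimuth in the x-y plane.
    """
    return (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )

def toy_light_field(x, y, z, theta, phi):
    """Hypothetical 5D light field L(x, y, z, theta, phi) -> radiance.

    Stand-in scene: radiance depends only on how much the ray points
    "up" (+z), like light from a bright overcast sky; position is ignored.
    """
    _, _, dz = direction_from_angles(theta, phi)
    return max(0.0, dz)

print(direction_from_angles(math.pi / 2, 0.0))  # a ray along +x, up to rounding
```

A real light field would return different radiance at different positions as well; the point here is only the shape of the function: five numbers in, one radiance value out (per color channel).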

The simplified light field equation, with radiance L depending only on position and direction:

$$L = L(x, y, z, \theta, \phi)$$

Polarization and bounce are often omitted for simplicity. The full equation is also time-dependent, but time is not needed for static scenes.

The magnitude of each light ray is given by its radiance, and the space of all possible light rays is given by the five-dimensional plenoptic function. In empty space, radiance does not change along a ray, so one of these five dimensions is redundant. This leaves the 4D light field, with 2D spatial (x, y) and 2D angular (u, v) information, which is what a plenoptic sensor captures.
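A quick check of the 4D parameterization: two different points on the same ray, or the same ray with its direction vector scaled, map to identical (x, y, u, v). A minimal sketch, assuming a point-plus-slope parameterization relative to the plane z = 0 (plenoptic cameras and Levoy and Hanrahan's two-plane scheme use closely related variants); `ray_to_4d` is a hypothetical helper:

```python
def ray_to_4d(origin, direction):
    """Map a 3D ray to 4D light field coordinates (x, y, u, v).

    Assumed parameterization (one common choice): (x, y) is where the ray
    crosses the reference plane z = 0, and (u, v) are its slopes
    dx/dz and dy/dz. Rays parallel to the plane (dz == 0) are not covered.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("ray never crosses the z = 0 plane")
    s = -oz / dz  # ray parameter at which z becomes 0
    return (ox + s * dx, oy + s * dy, dx / dz, dy / dz)

# Two points on the same ray give the same four numbers.
print(ray_to_4d((1.0, 2.0, 3.0), (0.5, -0.25, 1.0)))
print(ray_to_4d((2.0, 1.5, 5.0), (0.5, -0.25, 1.0)))
```

The price of dropping the fifth dimension is that rays parallel to the reference plane cannot be represented, which is why practical setups choose the plane to face the cameras.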

Another noteworthy advance came in 1951, when the first AI programs were written in England: a checkers-playing program and a chess-playing program.

Lasers, holograms and computer graphics, the 1960s-1980s

So what can we do with all these equations? A hologram is a photographic recording of a light field, rather than an image formed by a camera lens.

The development of the laser enabled the first practical optical holograms of 3D objects, made in 1962 in the Soviet Union and the USA. Holography has been widely referenced in movies, novels and TV, usually in science fiction, since the late 1970s, most notably in Star Wars.

Because holograms are still expensive and difficult to record and display, they have found few real-world applications so far.

The 1980s also saw home computers, early digital cameras and computer graphics come of age. In computer graphics, the rendering equation (1986) is an integral equation in which the equilibrium radiance leaving a point is the sum of emitted plus reflected radiance under a geometric optics approximation. It is used to describe physical light transport as seen by a single camera or the human eye, and it is a simplification of the general light transport described by the plenoptic function. Until recently, we did not think that the process of creating an image from a scene could be reversed.
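In symbols, the rendering equation reads $L_o(x, \omega_o) = L_e(x, \omega_o) + \int_\Omega f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i$, and renderers usually estimate the integral by random sampling. A minimal Monte Carlo sketch, assuming a single Lambertian surface point under a uniform sky (a case whose exact answer is known, so the estimate can be checked); the function names are hypothetical:

```python
import math
import random

def reflected_radiance_lambertian(albedo, sky_radiance, n_samples=100_000, seed=0):
    """Monte Carlo estimate of the reflected term of the rendering equation.

    Assumptions for this sketch: a Lambertian surface (BRDF = albedo / pi)
    lit by a uniform sky of constant radiance, so the exact answer is
    albedo * sky_radiance.
    """
    rng = random.Random(seed)
    brdf = albedo / math.pi
    pdf = 1.0 / (2.0 * math.pi)  # uniform sampling over the hemisphere
    total = 0.0
    for _ in range(n_samples):
        # Under uniform hemisphere sampling, cos(theta) is uniform on [0, 1).
        cos_theta = rng.random()
        total += brdf * sky_radiance * cos_theta / pdf
    return total / n_samples

def outgoing_radiance(emitted, albedo, sky_radiance):
    """L_o = L_e + reflected radiance: emission plus reflection."""
    return emitted + reflected_radiance_lambertian(albedo, sky_radiance)

print(reflected_radiance_lambertian(0.5, 2.0))  # close to 0.5 * 2.0 = 1.0
```

Real renderers apply the same estimator recursively, because the incoming radiance at one point is itself the outgoing radiance of another; that recursion is what makes the equation expensive to solve and, for a long time, hard to invert.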

Lightfield camera arrays, image-based-rendering and bullet time, 2000

Apple QuickTime VR (1995) was an early commercial product that let users view objects photographed from multiple angles, but it was eventually discontinued.

Film visual effects were another early adopter of a simplified light field workflow, known as time-slice or “bullet time”, which uses an array of cameras to give the impression of time slowing down or standing still altogether. The Matrix (1999) used it to great commercial success.

The camera setup is essentially the same as in the photosculpture process above: in both, multiple cameras around the subject let a virtual camera fly around it.
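The geometry of such a rig is simple. A sketch, assuming a flat ring of evenly spaced cameras as in the 24-camera photosculpture studio; the function names are hypothetical, and the fractional position it returns stands in for whatever view interpolation a real pipeline uses:

```python
import math

def camera_ring(n_cameras, radius):
    """Evenly spaced camera positions on a circle around a subject at the origin.

    Returns (x, y, angle_deg) for each camera; every camera faces the origin.
    """
    step = 2 * math.pi / n_cameras
    return [
        (radius * math.cos(i * step), radius * math.sin(i * step), math.degrees(i * step))
        for i in range(n_cameras)
    ]

def bracketing_cameras(angle_deg, n_cameras):
    """For a virtual viewpoint at angle_deg, return the two neighboring real
    cameras (i, j) and how far (0..1) the viewpoint lies from i toward j.
    """
    step = 360.0 / n_cameras
    position = (angle_deg % 360.0) / step
    i = int(position) % n_cameras
    return i, (i + 1) % n_cameras, position - int(position)

rig = camera_ring(24, 2.0)
print(rig[1])                          # second camera, 15 degrees around the ring
print(bracketing_cameras(7.5, 24))     # (0, 1, 0.5): halfway between cameras 0 and 1
```

With 24 cameras the rig has one camera every 15 degrees. A virtual camera at 7.5 degrees has no photo of its own, and it is exactly that in-between view that interpolation, or today a NeRF, has to synthesize.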

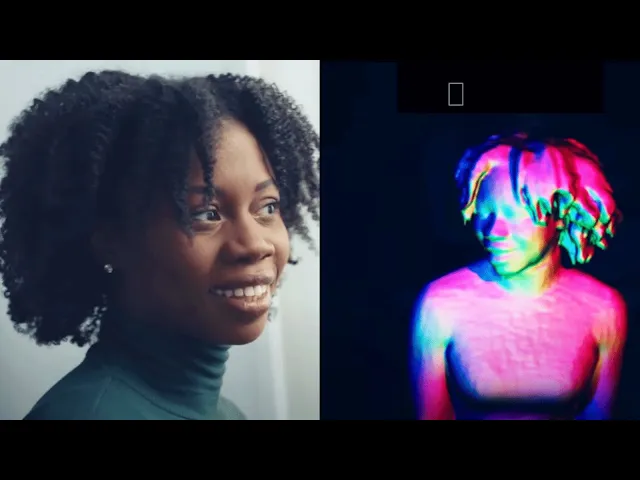

Someone has recently used NeRFs to create bullet time; notably, he needed only 15 phone cameras to capture the moving subjects.

Light fields were introduced into computer graphics relatively late, in 1996, by Marc Levoy and Pat Hanrahan. Their proposed application was image-based rendering: computing new views of a scene from pre-existing views without the need for scene geometry. There were early ideas for interpolating captured light field views, but neither neural networks nor the necessary computing power were available, and computer graphics had largely developed around meshes. The expensive, custom, synchronized camera arrays needed to capture light fields put the process out of reach for anyone but large university and research labs.
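Levoy and Hanrahan's key insight was that a new view is just a new 2D slice through the captured 4D light field, so no geometry is needed. A minimal sketch of that idea, assuming a regular grid of cameras whose images are already rectified to a shared focal plane; `render_novel_view` is hypothetical, and the original method interpolates in all four dimensions rather than only across cameras:

```python
def render_novel_view(light_field, u, v):
    """Synthesize the view from a fractional camera position (u, v).

    Assumptions: light_field[i][j] is the image (a list of rows of floats)
    from the camera at integer grid position (i, j), the grid is at least
    2 x 2, and all images are rectified to a shared focal plane, as in a
    two-plane light field. The result is a bilinear blend of the four
    nearest captured views; no scene geometry is used.
    """
    n_u, n_v = len(light_field), len(light_field[0])
    i0 = min(int(u), n_u - 2)
    j0 = min(int(v), n_v - 2)
    fu, fv = u - i0, v - j0
    weights = {
        (i0, j0): (1 - fu) * (1 - fv),
        (i0 + 1, j0): fu * (1 - fv),
        (i0, j0 + 1): (1 - fu) * fv,
        (i0 + 1, j0 + 1): fu * fv,
    }
    ref = light_field[0][0]
    out = [[0.0] * len(ref[0]) for _ in ref]
    for (i, j), w in weights.items():
        for r, row in enumerate(light_field[i][j]):
            for c, value in enumerate(row):
                out[r][c] += w * value
    return out

# A 2 x 2 camera grid of 1-pixel images; the view from the middle blends all four.
grid = [[[[0.0]], [[1.0]]], [[[2.0]], [[3.0]]]]
print(render_novel_view(grid, 0.5, 0.5))  # [[1.5]]
```

This works well only when cameras are densely spaced; with sparse cameras, blending produces ghosting, which is one reason capture rigs became so large and expensive.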

Paul Debevec, a computer graphics researcher, deserves credit for trying to bridge the gap between research and film effects by simplifying light field graphics. He developed custom capture and relighting devices, such as the Light Stage, for that purpose, but the need for custom hardware made adoption challenging.

In a brief wave of public excitement, a startup called Lytro brought light field cameras to consumers, starting with its first camera in 2012 and followed by the "Lytro Illum" in 2014. The products were not a huge commercial success, and the company eventually shut down. Most light field cameras are still expensive and impractical and produce large amounts of data. The research results did not see wide adoption, and the field became fairly niche for the next few years.

By the mid-2010s, AI and computers had progressed to the point where deepfakes were possible. More generally, neural rendering combines deep neural networks with physical models of image formation from computer graphics to create novel images and video footage based on existing scenes.

Neural re-rendering is a relatively new technique that combines a classical (or other) 3D representation and renderer with deep neural networks that re-render the classical render into more complete and realistic views. In contrast to Neural Image-based Rendering (N-IBR), neural re-rendering does not use input views at runtime and instead relies on the deep neural network to recover the missing details.

Deepfakes are an early neural rendering technique in which a person in an existing image or video is replaced with someone else's likeness. The original approach is believed to be based on Korshunova et al. (2016), which used a convolutional neural network (CNN).

Neural rendering allows us to reverse the rendering process, going from a model to pictures and back. Inverse rendering refers to estimating intrinsic scene characteristics, such as geometry, materials and lighting, from a single photo or a set of photos of the scene. Although predicting these characteristics from 2D projections is highly underconstrained, recent advances have taken a big step toward solving this problem: another breakthrough.
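A toy version of the inverse problem: render an "image" from known parameters, then recover one of those parameters from the pixel values alone by gradient descent. This is a hypothetical sketch with a one-parameter Lambertian scene and a known light, far simpler than real inverse rendering, which estimates geometry, materials and lighting together:

```python
def render(albedo, light_intensity, cosines):
    """Toy forward renderer: Lambertian shading of points with known n·l cosines."""
    return [albedo * light_intensity * max(0.0, c) for c in cosines]

def invert_albedo(observed, light_intensity, cosines, steps=500, lr=0.1):
    """Recover the albedo by gradient descent on the mean squared pixel error.

    Only the albedo is unknown here. If the light intensity were unknown too,
    only the product albedo * light_intensity could be recovered, a small
    example of why inverse rendering is underconstrained.
    """
    albedo = 0.0
    for _ in range(steps):
        predicted = render(albedo, light_intensity, cosines)
        # d/d(albedo) of (pred - obs)^2 is 2 * (pred - obs) * light * max(0, cos)
        grad = sum(
            2 * (p - o) * light_intensity * max(0.0, c)
            for p, o, c in zip(predicted, observed, cosines)
        )
        albedo -= lr * grad / len(cosines)
    return albedo

cosines = [1.0, 0.8, 0.5, 0.2, -0.3]
image = render(0.6, 2.0, cosines)
print(invert_albedo(image, 2.0, cosines))  # approximately 0.6
```

Neural inverse rendering follows the same loop at a vastly larger scale: a differentiable renderer, a loss against real photos, and gradients flowing back into the scene parameters.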

Generative AI, inverse rendering: NeRF, 2020

So, some 112 years after the plenoptic equation was first written down, Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi and Ren Ng published Neural Radiance Fields (NeRF), a learned, continuous 5D light field, in 2020. Their paper presents a method that achieves state-of-the-art results for synthesizing novel views of complex scenes by optimizing an underlying continuous volumetric scene function using a sparse set of input views.

The key idea in NeRF is to represent the entire volume with a continuous function, parameterized by a multi-layer perceptron (MLP), that maps a 3D position and viewing direction to color and density. This bypasses the need to discretize space into voxel grids, which usually suffer from resolution constraints. NeRF is a neural rendering technique that synthesizes photorealistic new views, and no special cameras or hardware are necessary for capture. Unlike photogrammetry, NeRF can handle reflections, transparent objects, small details and fuzzy objects. An important advantage is that it needs fewer and lower-resolution photos for reconstruction (50-150 images) than previous approaches, because the neural network interpolates between views.
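Rendering a NeRF means marching along each camera ray, querying the network for color and density at sample points, and alpha-compositing the results. A minimal sketch of that quadrature, assuming a hand-written `toy_field` (a red sphere) in place of the trained MLP and evenly spaced samples instead of NeRF's stratified and hierarchical sampling; both function names are hypothetical:

```python
import math

def toy_field(point, direction):
    """Stand-in for NeRF's MLP: maps a 3D point (and view direction) to (rgb, density).

    Hypothetical scene: a dense red sphere of radius 1 at the origin, empty elsewhere.
    The direction is ignored here; in NeRF the color may depend on it.
    """
    x, y, z = point
    inside = x * x + y * y + z * z <= 1.0
    return (1.0, 0.0, 0.0), (50.0 if inside else 0.0)

def render_ray(origin, direction, field, near=0.0, far=6.0, n_samples=128):
    """Composite the color along one ray with NeRF-style volume rendering.

    C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i
    T_i = exp(-sum_{j<i} sigma_j * delta_j), the light surviving to sample i
    """
    delta = (far - near) / n_samples
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of the i-th segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, sigma = field(point, direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color)

print(render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field))  # nearly pure red
print(render_ray((0.0, 5.0, -3.0), (0.0, 0.0, 1.0), toy_field))  # misses: black
```

Because every step is differentiable, the same computation can be run backwards during training: comparing rendered pixels with the input photos yields gradients for the network that plays the role of `toy_field`.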

Notice how the input images resemble a light field capture

NeRF math should look familiar to you by now
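Written out, the volume rendering equation used in the NeRF paper gives the expected color C of a camera ray r(t) = o + td between near and far bounds t_n and t_f. Here σ is the density and c the view-dependent color predicted by the network at position r(t) and direction d, the same 5D inputs as the light field function; T(t) is the fraction of light that travels from t_n to t without being blocked:

```latex
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\!\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)
```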

Unfortunately, creating a neural radiance field this way was still slow, requiring expert knowledge and days of computing. The breakthrough research initially went largely unnoticed outside expert circles.

Realtime NeRF: NVIDIA Instant-NGP 2022

Only two years later, Thomas Müller, Alex Evans, Christoph Schied and Alexander Keller at NVIDIA enabled real-time NeRF rendering on gaming PCs when they published “Instant Neural Graphics Primitives with a Multiresolution Hash Encoding”.
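The multiresolution hash encoding in the paper's title is what made the speedup possible: instead of relying on one large MLP, learned features are stored in small hash tables for a series of grids of increasing resolution. A minimal sketch of the indexing part, assuming the geometric per-level growth factor and the XOR-of-primes spatial hash described in the paper; the function names are hypothetical:

```python
import math

# Per-dimension primes for spatial hashing, as given in the Instant-NGP paper.
PRIMES = (1, 2654435761, 805459861)

def level_resolutions(n_levels, n_min, n_max):
    """Grid resolution of each level, growing geometrically from n_min to n_max."""
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (n_levels - 1))
    return [math.floor(n_min * growth ** level) for level in range(n_levels)]

def hash_vertex(ix, iy, iz, table_size):
    """Map a non-negative integer grid vertex to a slot in a feature table.

    XOR of each coordinate times its prime (emulating 32-bit unsigned
    arithmetic), modulo the table size. Different vertices can collide in
    the same slot; the method tolerates this and lets training resolve it.
    """
    h = 0
    for coord, prime in zip((ix, iy, iz), PRIMES):
        h ^= (coord * prime) & 0xFFFFFFFF
    return h % table_size

print(level_resolutions(16, 16, 512))
print(hash_vertex(3, 7, 11, 2 ** 19))
```

In the full method, each level also trilinearly interpolates the feature vectors stored at the eight grid vertices around a sample point, and the concatenated features from all levels are fed into a small MLP; that part is omitted here.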

This time the technology was ready for consumers and companies to use, and it received more press and prizes.

At the same time we saw the first public use of NeRF rendering in commercial mapping:

Apple's Flyover feature now shows selected landmarks as NeRFs in full 3D.

Also, NeRF co-inventor Matthew Tancik started the open-source library project Nerfstudio to make the technology more accessible.

It is still early days for NeRF, but we have come a long way to get here.

Future

So what's next for NeRF? Here are my bets:

NeRF rendering will come to web browsers and will be integrated into game engines and traditional computer graphics software

Large-scale maps made from NeRFs will appear in commercial and custom mapping products

We will have time-dynamic NeRFs

Editable NeRFs that work just like a graphics scene: shaders, geometry changes, relighting and composition

NeRFs made with generative AI instead of captured data, or captured NeRFs modified by prompts

Video conferencing with NeRF and something like a real-time Google Earth are already technically possible, but limited by the need for custom hardware, which has proven an unfavorable factor in the past. But never say never.

Google Project Starline

Links and image sources

Early photography and photosculpture, ca 1850

The Theory: The plenoptic function and lightfields 1908

Lasers, holograms and computer graphics, the 1960s-1980s

Lightfield camera arrays, image-based-rendering and bullet time, 2000

https://beforesandafters.com/2021/07/15/vfx-artifacts-the-bullet-time-rig-from-the-matrix

https://labs.laan.com/blogs/slow-motion-bullet-time-with-nerf-neural-radiance-fields/


First NeRF paper, 2020

Realtime NeRF: NVIDIA instant-ngp 2022

Future
