NVIDIA Publishes Blog on Marble Workflow for Robotics Simulation with Isaac Sim

Michael Rubloff

Dec 18, 2025

NVIDIA has published a new end-to-end tutorial demonstrating how World Labs’ Marble can be integrated directly into Isaac Sim, positioning generative world models as a practical tool for robotics simulation rather than a speculative one.

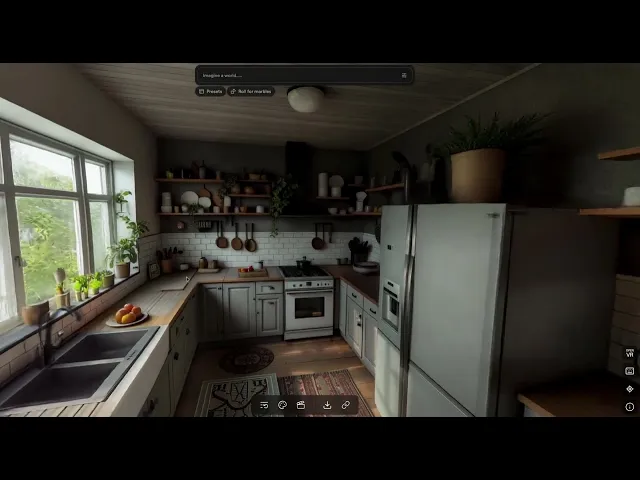

As a quick refresher, Marble is the first publicly available model from World Labs. Using either a reference image or a text prompt, users can rapidly generate an explorable 3D scene rendered using Gaussian splatting, with the ability to export those worlds for downstream workflows.

The NVIDIA article walks through a complete pipeline that takes a Marble-generated environment, exported as Gaussian splats alongside a collider mesh, and brings it into Isaac Sim using NVIDIA Omniverse NuRec and USD.

The workflow itself is notably straightforward, and it illustrates why the industry has become increasingly excited about Gaussian splatting for simulation-based pipelines. In NVIDIA’s approach, environments are sourced from the public Marble gallery, where worlds can be generated from text prompts, images, video, or coarse 3D structure.

Each scene is exported in two complementary forms: a standard .ply file containing the Gaussian splats for visual fidelity, and a GLB collider mesh that provides the geometry required for physics and collision handling. Downloading custom scenes requires a paid World Labs subscription, though sample assets are available. Alternatively, you can use other world generators, such as NVIDIA's Lyra or Tencent's HunyuanWorld 1.0.
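Because the visual layer is just a .ply file, it can be inspected before any conversion. The sketch below is our own illustration, not part of NVIDIA's tutorial: it reads the PLY header with the Python standard library and checks for the per-splat properties written by the original 3D Gaussian Splatting reference code (positions, `f_dc_*` color coefficients, `opacity`, `scale_*`, `rot_*`). We assume Marble's export follows that common layout, and the helper names are hypothetical.

```python
import math
from dataclasses import dataclass

# Property names written per Gaussian by the original 3DGS reference code.
# Assumption: Marble's .ply export uses the same names.
SPLAT_PROPERTIES = {"x", "y", "z", "f_dc_0", "opacity", "scale_0", "rot_0"}


@dataclass
class PlyHeader:
    fmt: str               # e.g. "binary_little_endian"
    vertex_count: int      # one "vertex" per Gaussian splat
    properties: list[str]  # per-vertex property names, in file order
    header_bytes: int      # byte offset where the vertex data begins


def read_ply_header(data: bytes) -> PlyHeader:
    """Parse the ASCII header of a PLY file, tracking only the vertex element."""
    marker = b"end_header\n"
    end = data.find(marker)
    if not data.startswith(b"ply\n") or end < 0:
        raise ValueError("not a PLY file")
    fmt, count, props, in_vertex = "", 0, [], False
    for line in data[:end].decode("ascii").splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "format":
            fmt = tokens[1]
        elif tokens[0] == "element":
            in_vertex = tokens[1] == "vertex"
            if in_vertex:
                count = int(tokens[2])
        elif tokens[0] == "property" and in_vertex:
            props.append(tokens[-1])  # the last token is the property name
    return PlyHeader(fmt, count, props, end + len(marker))


def looks_like_gaussian_splats(properties: list[str]) -> bool:
    """True if the vertex element carries the core 3DGS attributes."""
    return SPLAT_PROPERTIES.issubset(properties)


def decode_opacity(stored: float) -> float:
    """3DGS stores opacity as a logit; the sigmoid maps it back into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-stored))


def decode_scale(stored: float) -> float:
    """3DGS stores per-axis scale as a natural log; exp recovers the size."""
    return math.exp(stored)
```

Note that opacity and scale are stored before activation in that layout, which is why raw values from the file look odd until they pass through the sigmoid and exponential.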

To bring the splats into Isaac Sim, NVIDIA leverages Omniverse NuRec, which the company first introduced at SIGGRAPH earlier this year. The Marble-generated .ply file is converted into USDZ using a conversion script from NVIDIA’s 3DGRUT library. In this process, the Gaussian splats are represented as a volumetric USD primitive, allowing Omniverse to render them efficiently while keeping them fully embedded in the USD scene graph.
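A USDZ file is a USD package, which the USDZ specification defines as an uncompressed zip archive whose first entry is the default USD layer. That makes a quick sanity check on the converter's output possible with Python's `zipfile` module. The helper below is hypothetical and not part of 3DGRUT; it also skips the 64-byte data alignment that the spec requires.

```python
import io
import zipfile

# File extensions the USDZ spec accepts for the package's root layer.
USD_LAYER_EXTS = (".usd", ".usda", ".usdc")


def inspect_usdz(data: bytes) -> dict:
    """Report basic USDZ package properties for an in-memory archive."""
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        infos = zf.infolist()
        if not infos:
            raise ValueError("empty archive")
        root = infos[0].filename  # the first entry is the default layer
        return {
            "root_layer": root,
            "root_is_usd": root.lower().endswith(USD_LAYER_EXTS),
            # USDZ forbids compression, so every entry should be stored as-is.
            "all_stored": all(i.compress_type == zipfile.ZIP_STORED for i in infos),
            "files": [i.filename for i in infos],
        }
```

Usage would look like `inspect_usdz(open("scene.usdz", "rb").read())`, where the file name is a placeholder for whatever the conversion step produced.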

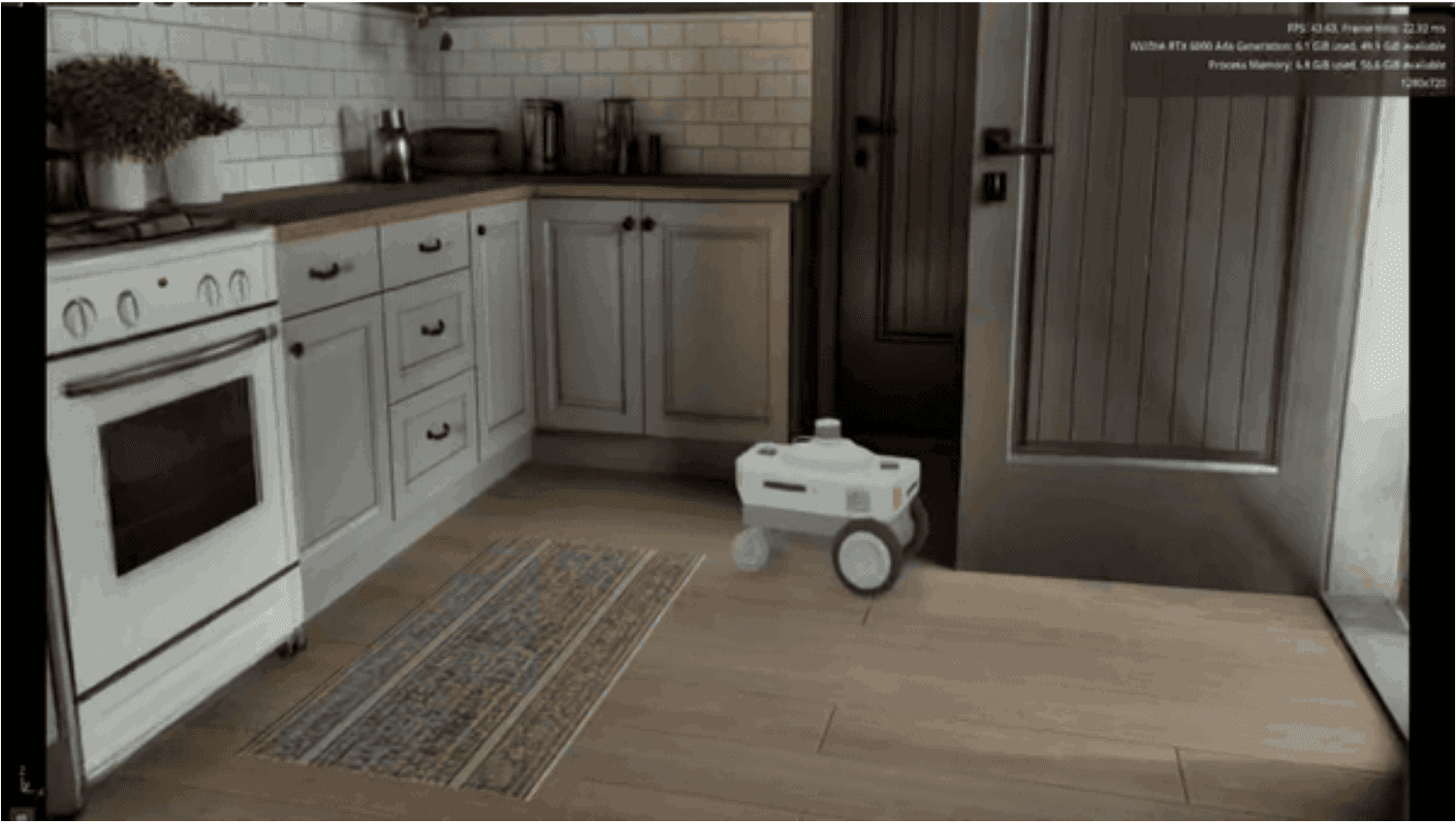

Once imported into Isaac Sim, the radiance field is aligned and scaled to real-world dimensions. NVIDIA’s tutorial walks through grounding the scene, correcting orientation, and validating scale using simple reference geometry.
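Mathematically, this alignment step is a rotation, a uniform scale, and a translation. Here is a minimal sketch of that math. It assumes the exported scene is Y-up (Isaac Sim stages are Z-up, in meters, by default) and that you have measured one reference length in the scene, such as a countertop height, whose real size you know. The function names and the Y-up assumption are ours, so check your export's axis convention before relying on them.

```python
Vec3 = tuple[float, float, float]


def y_up_to_z_up(p: Vec3) -> Vec3:
    """Rotate +90 degrees about X so the source +Y axis maps to +Z."""
    x, y, z = p
    return (x, -z, y)


def scale_factor(real_length_m: float, measured_length_units: float) -> float:
    """Uniform scale that turns scene units into meters."""
    if measured_length_units <= 0:
        raise ValueError("measured length must be positive")
    return real_length_m / measured_length_units


def align_points(points: list[Vec3], real_length_m: float,
                 measured_length_units: float,
                 floor_height_units: float) -> list[Vec3]:
    """Rotate to Z-up, drop the floor to z = 0, then scale to meters.

    floor_height_units is the floor's height along the *source* Y axis,
    which becomes Z after the rotation.
    """
    s = scale_factor(real_length_m, measured_length_units)
    out = []
    for p in points:
        x, y, z = y_up_to_z_up(p)
        out.append((x * s, y * s, (z - floor_height_units) * s))
    return out
```

In Isaac Sim itself you would apply the equivalent rotate, scale, and translate values to the imported prim's transform rather than moving individual points; the function above just makes the arithmetic explicit.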

From there, physics and lighting are layered into the environment. A ground plane is configured as a matte object to properly receive shadows, while dome lighting is added to establish consistent global illumination. The radiance field is then linked to physical proxy geometry so that lighting and shadow interactions behave as expected.

The collider mesh exported from Marble is imported next, aligned with the splat volume, and enabled as a physics collider. While invisible in the final render, it governs how robots interact with surfaces such as floors, counters, and furniture.
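One simple way to confirm that the collider mesh and the splat volume line up is to compare their axis-aligned bounding boxes after both are in the stage frame. The sketch below is a hypothetical check with an arbitrary 5 cm tolerance, not a step from NVIDIA's tutorial. Splat scenes often contain stray "floater" Gaussians that inflate the bounds, so a mismatch is a prompt to look closer rather than proof of misalignment.

```python
Vec3 = tuple[float, float, float]
Box = tuple[Vec3, Vec3]


def aabb(points: list[Vec3]) -> Box:
    """Axis-aligned bounding box as (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def aabb_offset(a: Box, b: Box) -> tuple[Vec3, Vec3]:
    """Per-axis difference in box centers and in box sizes (b minus a)."""
    (amin, amax), (bmin, bmax) = a, b
    center = tuple((bmin[i] + bmax[i]) / 2 - (amin[i] + amax[i]) / 2 for i in range(3))
    size = tuple((bmax[i] - bmin[i]) - (amax[i] - amin[i]) for i in range(3))
    return center, size


def is_aligned(splat_centers: list[Vec3], collider_vertices: list[Vec3],
               tol: float = 0.05) -> bool:
    """True if both center and size differences are within tol (meters) on every axis."""
    center, size = aabb_offset(aabb(splat_centers), aabb(collider_vertices))
    return all(abs(v) <= tol for v in center + size)
```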

At this stage, the environment behaves like a traditional, hand-modeled simulation asset, despite having been generated by an AI model. To validate the setup, NVIDIA drops a Nova Carter robot into the scene, assigns a differential controller, and drives it through the kitchen environment using keyboard input. The robot respects collisions, rests correctly on the floor, and interacts naturally with the space.
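A differential controller converts a body-frame command, a forward speed v and a yaw rate ω, into left and right wheel speeds using ω_left = (v - ωL/2)/r and ω_right = (v + ωL/2)/r, where r is the wheel radius and L is the wheel separation. The sketch below implements that standard kinematic model plus a hypothetical WASD key mapping. It is not Isaac Sim's controller API, and the command magnitudes and wheel dimensions are placeholders rather than Nova Carter's actual specs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiffDrive:
    wheel_radius: float  # meters
    wheel_base: float    # distance between the two drive wheels, meters

    def wheel_speeds(self, linear: float, angular: float) -> tuple[float, float]:
        """Map (v [m/s], omega [rad/s]) to (left, right) wheel speeds [rad/s].

        Positive omega turns the robot left (counterclockwise seen from above),
        so the right wheel spins faster.
        """
        half = self.wheel_base / 2.0
        left = (linear - angular * half) / self.wheel_radius
        right = (linear + angular * half) / self.wheel_radius
        return left, right


# Hypothetical keyboard mapping: key -> (linear m/s, angular rad/s).
KEY_COMMANDS = {
    "w": (0.5, 0.0),
    "s": (-0.5, 0.0),
    "a": (0.0, 1.0),
    "d": (0.0, -1.0),
}


def command_for_keys(pressed: set[str]) -> tuple[float, float]:
    """Sum the commands of all currently pressed keys, ignoring unknown keys."""
    v = sum(KEY_COMMANDS[k][0] for k in pressed if k in KEY_COMMANDS)
    w = sum(KEY_COMMANDS[k][1] for k in pressed if k in KEY_COMMANDS)
    return v, w
```

For example, holding only "w" with `DiffDrive(wheel_radius=0.1, wheel_base=0.4)` gives both wheels 5 rad/s, so the robot drives straight; adding "a" speeds up the right wheel and curves the path to the left.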

From Isaac Sim’s perspective, the Marble-generated world is no different from a manually authored USD environment. It’s becoming increasingly common to see generative worlds slot directly into robotics workflows. For robotics teams, this unlocks faster iteration, greater environment diversity, and more scalable sim-to-real testing. For the radiance field community, it marks another expansion of Gaussian splats beyond visualization and capture, into physics-driven, embodied AI development.

Read the original NVIDIA blog here.