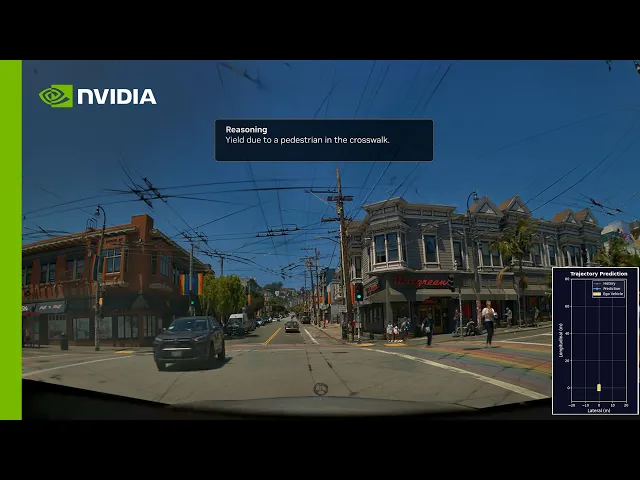

At CES, NVIDIA introduced a new autonomous driving stack under the name Alpamayo, anchored by what the company positions as its first open, reasoning-based vision-language-action (VLA) model, aimed squarely at long-tail problems. While much of the headline attention has gone to Alpamayo 1 itself, the more consequential development for radiance field practitioners sits slightly lower in the stack. Under the hood, NVIDIA also unveiled AlpaSim, an open source autonomous vehicle simulator whose sensor realism is built on NuRec, NVIDIA’s neural rendering system, which uses a Gaussian splatting variant called 3D Gaussian Unscented Transform (3DGUT).

AlpaSim signals how NVIDIA believes autonomous systems should be tested, stressed, and ultimately trusted, and it places radiance fields, via 3DGUT, at the center of that vision.

AlpaSim is organized around three priorities: sensor fidelity, horizontal scalability, and hackability for research. Together they suggest that, for the next generation of reasoning-based AV systems, visual and semantic realism matter significantly, and that the ability to scale experiments and interrogate model behavior matters just as much.

Those priorities are reflected directly in AlpaSim’s architecture. The simulator is implemented as a collection of Python-based microservices that communicate exclusively via gRPC. At the center sits the runtime, which maintains the world state and orchestrates the simulation loop. Surrounding it are independent services for neural rendering, traffic behavior, vehicle control, physics constraints, policy inference, and evaluation. Each service can be replicated independently, allowing researchers to scale compute precisely where it is needed.
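To make "replicated independently" concrete, here is a minimal sketch of per-service scaling. It is not AlpaSim code: real services sit behind gRPC endpoints, whereas here each replica is a plain Python callable so the example runs on its own. The names `ServicePool`, `make_renderer`, and `make_policy` are hypothetical, and round-robin routing is just one plausible load-balancing choice, assumed for illustration.

```python
from itertools import cycle
from typing import Callable, Dict, List

# A "service" here is any callable mapping a request dict to a response dict.
# In AlpaSim these would be gRPC endpoints; plain callables keep the sketch runnable.
Service = Callable[[dict], dict]


class ServicePool:
    """Round-robin pool of interchangeable replicas of a single service."""

    def __init__(self, name: str, replicas: List[Service]):
        if not replicas:
            raise ValueError(f"service {name!r} needs at least one replica")
        self.name = name
        self.replicas = replicas
        self._order = cycle(range(len(replicas)))

    def call(self, request: dict) -> dict:
        # Route each request to the next replica in turn to spread the load.
        idx = next(self._order)
        return {"replica": idx, **self.replicas[idx](request)}


def make_renderer(replica_id: int) -> Service:
    # Hypothetical rendering replica: returns a placeholder frame description.
    def render(request: dict) -> dict:
        return {"frame": f"t={request['t']} rendered by replica {replica_id}"}
    return render


def make_policy() -> Service:
    # Hypothetical policy replica: plans a trivial two-waypoint trajectory.
    def infer(request: dict) -> dict:
        return {"trajectory": [request["t"], request["t"] + 1]}
    return infer


# Scale only where the compute is needed: three renderers, one policy replica.
services: Dict[str, ServicePool] = {
    "renderer": ServicePool("renderer", [make_renderer(i) for i in range(3)]),
    "policy": ServicePool("policy", [make_policy()]),
}

if __name__ == "__main__":
    for t in range(4):
        print(services["renderer"].call({"t": t})["frame"])
```

With three renderer replicas, four consecutive renderer calls on a fresh pool land on replicas 0, 1, 2, and then 0 again, while the policy service stays on its single replica. Adding rendering capacity means only lengthening one list.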

The neural rendering engine, or NRE, is where radiance fields enter the picture. Built on NVIDIA’s NuRec system, the NRE uses Gaussian splatting to generate photorealistic camera views from reconstructed driving scenes. Rather than relying on handcrafted assets or synthetic textures, AlpaSim renders sensor data directly from neural scene representations derived from real-world captures. The result is a stream of camera frames that preserves the visual complexity of real environments while still allowing novel viewpoints and repeatable closed-loop testing. For perception and end-to-end policy research, this helps bridge a long-standing gap between static datasets and fully synthetic worlds.
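The splatting variant behind NuRec, 3DGUT, differs from standard 3D Gaussian splatting mainly in how each 3D Gaussian is projected into the image. Standard splatting linearizes the camera projection with its Jacobian; 3DGUT instead applies an unscented transform: it picks a small set of sigma points from the Gaussian, pushes each one through the exact (nonlinear) projection, and refits a 2D Gaussian from the results. The sketch below illustrates only that projection step, assuming an undistorted pinhole camera and the common (α, β, κ) sigma-point weighting. It is not NuRec’s implementation, and the function names are hypothetical.

```python
import numpy as np


def sigma_points(mu, cov, alpha=1.0, beta=2.0, kappa=0.0):
    """Return the 2n+1 unscented-transform sigma points and their weights."""
    n = mu.shape[0]
    lam = alpha**2 * (n + kappa) - n
    # Columns of the Cholesky factor of (n + lam) * cov give the spread directions.
    L = np.linalg.cholesky((n + lam) * cov)
    pts = [mu] + [mu + L[:, i] for i in range(n)] + [mu - L[:, i] for i in range(n)]
    w_mean = np.full(2 * n + 1, 1.0 / (2 * (n + lam)))
    w_cov = w_mean.copy()
    w_mean[0] = lam / (n + lam)
    w_cov[0] = lam / (n + lam) + (1 - alpha**2 + beta)
    return np.array(pts), w_mean, w_cov


def project_pinhole(p, fx, fy, cx, cy):
    """Nonlinear pinhole projection of a camera-space point to pixel coordinates.

    Assumption: an undistorted pinhole camera. The unscented transform only
    evaluates this pointwise, so any nonlinear camera model could replace it.
    """
    x, y, z = p
    return np.array([fx * x / z + cx, fy * y / z + cy])


def unscented_project(mu, cov, fx, fy, cx, cy, **ut_params):
    """Approximate the image-space 2D Gaussian of a camera-space 3D Gaussian.

    Instead of linearizing the projection with its Jacobian, push each sigma
    point through the exact projection and refit a Gaussian to the results.
    """
    pts, w_mean, w_cov = sigma_points(mu, cov, **ut_params)
    projected = np.array([project_pinhole(p, fx, fy, cx, cy) for p in pts])
    mean2d = w_mean @ projected
    diffs = projected - mean2d
    # Weighted sum of outer products: sum_i w_i * d_i d_i^T.
    cov2d = (w_cov[:, None] * diffs).T @ diffs
    return mean2d, cov2d


if __name__ == "__main__":
    mean2d, cov2d = unscented_project(
        np.array([1.0, 0.0, 10.0]), np.eye(3) * 0.01, 100.0, 100.0, 0.0, 0.0
    )
    print(mean2d, cov2d, sep="\n")
```

Because the projection is never differentiated, swapping `project_pinhole` for a distorted (for example fisheye) camera model needs no Jacobian derivation, which is a practical advantage for the wide-angle cameras common in driving data.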

The simulation loop itself is designed to be transparent and inspectable. A configuration wizard initializes scenarios and launches services. The runtime tracks the evolving world state and, at each step, passes it simultaneously to the neural renderer, which generates the ego vehicle’s sensor data, and to the traffic simulator, which actuates non-ego agents such as vehicles and pedestrians. The ego policy consumes the rendered sensor data and outputs a planned trajectory. That trajectory is processed by a controller and a simplified vehicle model, passed through a physics module that enforces ground constraints, and then fed back into the runtime as an updated state. Throughout this process, the simulator produces detailed logs that persist beyond the simulation run and enable offline evaluation through a dedicated metrics module.
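A toy version of that loop can make the data flow concrete. Everything below is hypothetical: the function names, the 1D longitudinal vehicle model, the constant-grade road used as the ground constraint, and the constant-speed traffic model are stand-ins chosen for brevity, and each "service" is a plain function call rather than a gRPC microservice.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# All components below are hypothetical stand-ins for AlpaSim's services.


@dataclass(frozen=True)
class WorldState:
    t: int                    # simulation step index
    ego_x: float              # ego position along the road (m)
    ego_z: float              # ego height (m)
    ego_speed: float          # ego speed (m/s)
    agents: Dict[str, float] = field(default_factory=dict)  # agent id -> position (m)


def render_sensors(state: WorldState) -> dict:
    # Stand-in for the neural renderer: a "frame" that only records the ego pose.
    return {"t": state.t, "ego_x": state.ego_x, "ego_z": state.ego_z}


def step_traffic(state: WorldState, dt: float) -> Dict[str, float]:
    # Stand-in traffic model: every non-ego agent drives at a constant 5 m/s.
    return {aid: x + 5.0 * dt for aid, x in state.agents.items()}


def ego_policy(sensors: dict) -> dict:
    # Stand-in policy: the "planned trajectory" is reduced to a target speed.
    return {"target_speed": 10.0}


def controller_and_vehicle(state: WorldState, plan: dict, dt: float,
                           max_accel: float = 2.0) -> Tuple[float, float]:
    # Proportional speed controller, clipped to an acceleration limit,
    # driving a 1D kinematic vehicle model.
    accel = max(-max_accel, min(max_accel, plan["target_speed"] - state.ego_speed))
    speed = state.ego_speed + accel * dt
    return state.ego_x + speed * dt, speed


def ground_height(x: float) -> float:
    # Stand-in physics constraint: a road with a constant 1% grade.
    return 0.01 * x


def run(state: WorldState, steps: int,
        dt: float = 0.1) -> Tuple[WorldState, List[WorldState]]:
    log: List[WorldState] = []
    for _ in range(steps):
        sensors = render_sensors(state)                     # renderer: ego sensors
        agents = step_traffic(state, dt)                    # traffic: non-ego agents
        plan = ego_policy(sensors)                          # ego policy
        x, speed = controller_and_vehicle(state, plan, dt)  # controller + vehicle model
        z = ground_height(x)                                # physics: snap ego to road
        state = WorldState(state.t + 1, x, z, speed, agents)  # updated world state
        log.append(state)                                   # kept for offline evaluation
    return state, log


if __name__ == "__main__":
    final, log = run(WorldState(0, 0.0, 0.0, 0.0, {"car": 20.0}), steps=10)
    print(final)
```

Because each stage only sees the state or message handed to it, any stand-in can be replaced by a richer model without touching the loop, which is the same property the microservice split is meant to provide at scale.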

By decoupling metrics computation from simulation execution, AlpaSim allows researchers to replay, analyze, and compare behaviors at scale. This separation aligns with NVIDIA’s broader argument that reasoning-based AV models cannot be adequately assessed through open-loop benchmarks alone. When models generate explicit reasoning traces and long-horizon plans, the testing infrastructure must be able to probe those decisions across diverse and rare scenarios.
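Because logs outlive the run, metrics can be computed later by code that never touches the simulator. The sketch below assumes a JSON Lines log with hypothetical `ego_x` and `lead_x` fields and an illustrative gap-based safety metric; AlpaSim’s actual log format and metric definitions are not described in the announcement and will differ.

```python
import io
import json
from typing import Dict, Iterable, List, TextIO

# Hypothetical log schema: one record per step with "t", "ego_x", "lead_x" (m).


def write_log(records: Iterable[dict], stream: TextIO) -> None:
    # Persist one JSON record per simulation step (JSON Lines).
    for r in records:
        stream.write(json.dumps(r) + "\n")


def read_log(stream: TextIO) -> List[dict]:
    # Reload a persisted run; no simulator is needed at this point.
    return [json.loads(line) for line in stream if line.strip()]


def compute_metrics(records: List[dict], vehicle_length: float = 4.5,
                    min_safe_gap: float = 2.0) -> dict:
    # Illustrative metric: bumper-to-bumper gap to the lead vehicle per step.
    gaps = [r["lead_x"] - r["ego_x"] - vehicle_length for r in records]
    return {
        "min_gap_m": min(gaps),
        "collision": any(g <= 0.0 for g in gaps),
        "unsafe_steps": sum(1 for g in gaps if g < min_safe_gap),
    }


def compare_runs(runs: Dict[str, List[dict]]) -> Dict[str, dict]:
    # The same metric code scores any number of stored runs side by side.
    return {name: compute_metrics(recs) for name, recs in runs.items()}


if __name__ == "__main__":
    buf = io.StringIO()
    write_log([{"t": 0, "ego_x": 0.0, "lead_x": 10.0},
               {"t": 1, "ego_x": 1.0, "lead_x": 8.0}], buf)
    buf.seek(0)
    print(compute_metrics(read_log(buf)))
```

Changing a metric definition then only means rerunning `compare_runs` over stored logs, not re-simulating every scenario.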

Within the Alpamayo ecosystem, AlpaSim plays a specific role. Alpamayo 1, the 10B-parameter reasoning VLA model released alongside it, is positioned as a teacher model rather than a production driver: developers are expected to fine-tune and distill it into smaller policies for their own AV stacks. AlpaSim then becomes the environment where those distilled policies are validated, stress-tested, and compared, using neural reconstructions of real driving data and a scalable, repeatable testing harness. Together with NVIDIA’s Physical AI AV datasets, this forms a self-reinforcing loop of data, distillation, and closed-loop evaluation.

For the radiance field community, AlpaSim represents a meaningful expansion of 3DGUT's role in industry. What began as a technique for novel view synthesis and visualization is now being treated as foundational sensor infrastructure for physical AI. By embedding neural rendering directly into the autonomy development stack, NVIDIA is effectively betting that radiance fields will become a standard tool not just for seeing the world, but for teaching machines how to act within it.

If Alpamayo marks NVIDIA’s attempt to bring chain-of-thought reasoning into autonomous driving, AlpaSim is the substrate that makes that ambition testable. Alpamayo and AlpaSim are open source and permissively licensed.